Scientists have developed a new algorithm that can make computer animation more agile, acrobatic and realistic. Researchers at the University of California, Berkeley in the US used deep reinforcement learning to recreate natural motions, even for acrobatic feats like break dancing and martial arts. The simulated characters can also respond naturally to changes in the environment, such as recovering from tripping or being pelted by projectiles.

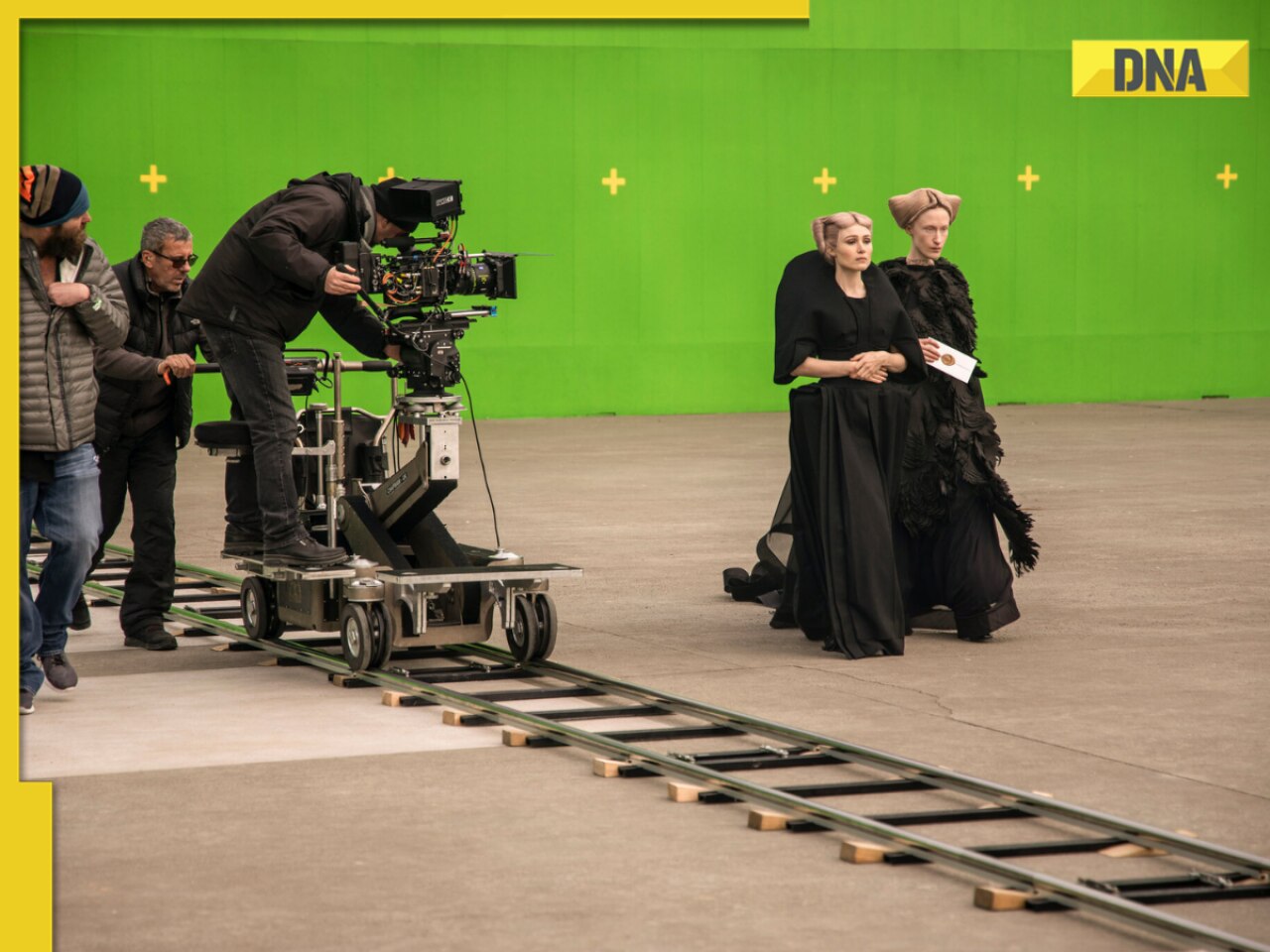

"This is actually a pretty big leap from what has been done with deep learning and animation," said UC Berkeley graduate student Xue Bin Peng. "In the past, a lot of work has gone into simulating natural motions, but these physics-based methods tend to be very specialised; they are not general methods that can handle a large variety of skills," said Peng. Each activity or task typically requires its own custom-designed controller.

"We developed more capable agents that behave in a natural manner," he said. "If you compare our results to motion-capture recorded from humans, we are getting to the point where it is pretty difficult to distinguish the two, to tell what is simulation and what is real. We're moving toward a virtual stuntman," said Peng. The work could also inspire the development of more dynamic motor skills for robots.

Traditional techniques in animation typically require designing custom controllers by hand for every skill: one controller for walking, for example, and another for running, flips and other movements.

These hand-designed controllers can look pretty good, Peng said. By contrast, general-purpose deep reinforcement learning methods such as GAIL (generative adversarial imitation learning) can simulate a variety of different skills using a single algorithm, but their results often look very unnatural. "The advantage of our work is that we can get the best of both worlds," Peng said. "We have a single algorithm that can learn a variety of different skills, and produce motions that rival if not surpass the state of the art in animation with handcrafted controllers."

To achieve this, Peng obtained reference data from motion-capture (mocap) clips demonstrating more than 25 different acrobatic feats, such as backflips, cartwheels, kip-ups and vaults, as well as simple running, throwing and jumping.

After providing the mocap data to the computer, the team allowed the system, dubbed DeepMimic, to "practice" each skill for about a month of simulated time, a bit longer than a human might take to learn the same skill. The computer practiced 24/7, going through millions of trials to learn how to realistically simulate each skill. It learned through trial and error: comparing its performance after each trial to the mocap data, and tweaking its behaviour to more closely match the human motion.
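The practice loop described above, in which each attempt is compared against the mocap reference and behaviour is adjusted to match it more closely, can be illustrated with a toy sketch. This is not DeepMimic's implementation: the pose is assumed to be a short list of joint angles, the exponential reward form and its `scale` factor are illustrative assumptions, and the hypothetical `practice` function uses simple random-search hill climbing in place of deep reinforcement learning.

```python
import math
import random


def imitation_reward(sim_pose, ref_pose, scale=2.0):
    """Score how closely a simulated pose matches a reference (mocap) pose.

    Assumption: poses are plain lists of joint angles. Returns a value in
    (0, 1], equal to 1.0 only when every joint matches exactly.
    """
    if len(sim_pose) != len(ref_pose):
        raise ValueError("poses must have the same number of joints")
    squared_error = sum((s - r) ** 2 for s, r in zip(sim_pose, ref_pose))
    return math.exp(-scale * squared_error)


def practice(ref_pose, trials=2000, step=0.1, seed=0):
    """Hypothetical trial-and-error loop (random-search hill climbing, not deep RL).

    Each trial perturbs the current pose at random; the change is kept
    only if it scores a higher imitation reward than the best so far.
    """
    rng = random.Random(seed)
    pose = [0.0] * len(ref_pose)
    best = imitation_reward(pose, ref_pose)
    for _ in range(trials):
        candidate = [p + rng.gauss(0.0, step) for p in pose]
        reward = imitation_reward(candidate, ref_pose)
        if reward > best:
            pose, best = candidate, reward
    return pose, best
```

Running `practice([0.3, -0.5, 1.2])` starts from a reward of about 0.03 and should climb close to 1.0 as the pose converges on the reference. A real system would instead train a neural-network policy across full physics simulations, which is far more involved than this single-pose toy.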

![submenu-img]() British TV host calls Priyanka Chopra 'Chianca Chop Free', angry fans say 'this is huge disrespect'; video goes viral

British TV host calls Priyanka Chopra 'Chianca Chop Free', angry fans say 'this is huge disrespect'; video goes viral![submenu-img]() Meme dog Kabosu, that inspired Dogecoin, dies

Meme dog Kabosu, that inspired Dogecoin, dies![submenu-img]() Deepika Padukone radiates 'mummy glow', spotted with baby bump in new video, netizens call her 'prettiest mom'

Deepika Padukone radiates 'mummy glow', spotted with baby bump in new video, netizens call her 'prettiest mom'![submenu-img]() India's biggest action film, had 1 hero, 7 villains, became superhit, made for Rs 6 crore, earned over Rs..

India's biggest action film, had 1 hero, 7 villains, became superhit, made for Rs 6 crore, earned over Rs..![submenu-img]() Will your Aadhaar Card become invalid after June 14 if not updated? Here's what UIDAI has to say

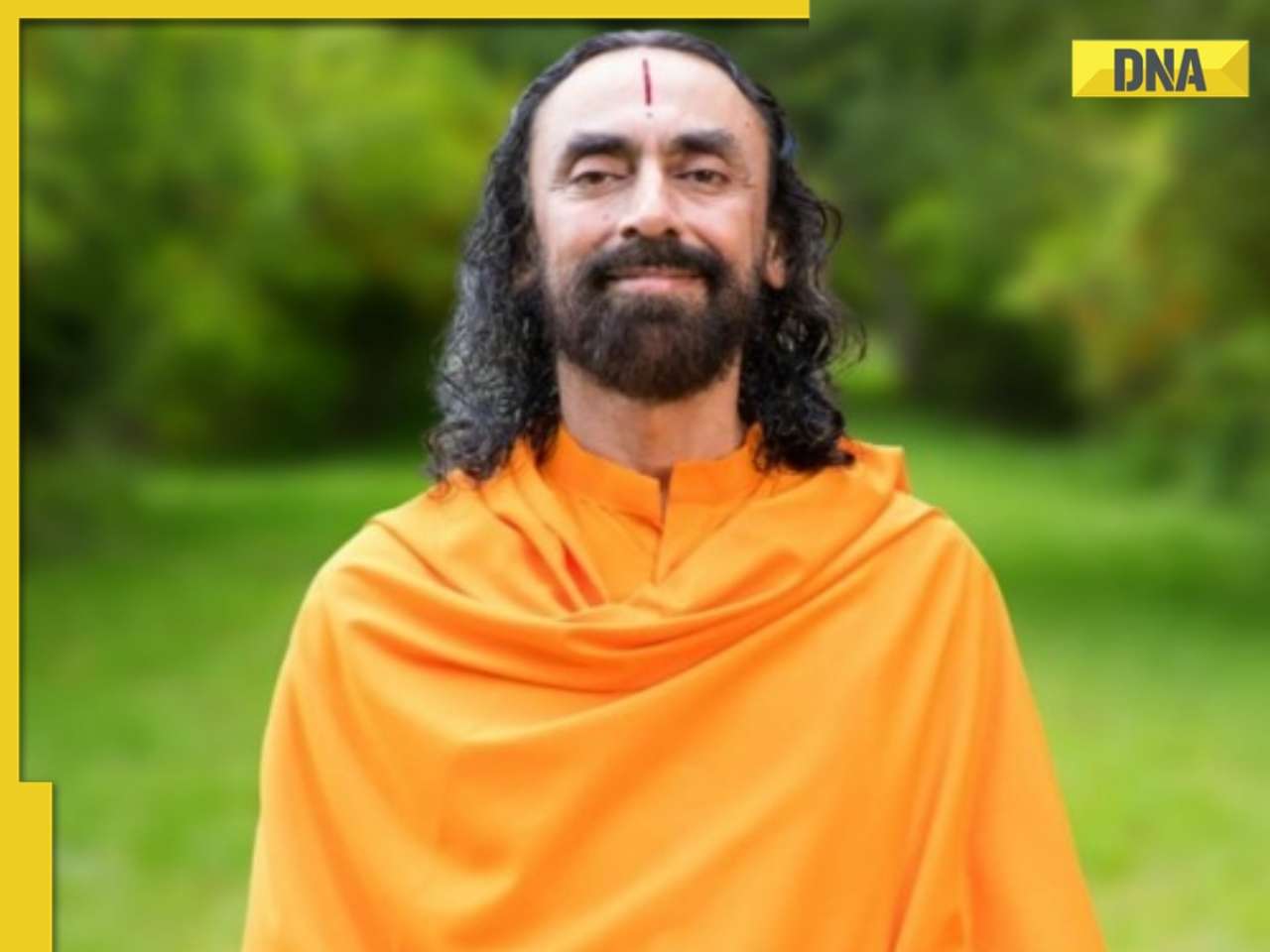

Will your Aadhaar Card become invalid after June 14 if not updated? Here's what UIDAI has to say![submenu-img]() Meet man, IIT Delhi, IIM Calcutta alumnus who quit high-paying job, became a monk due to..

Meet man, IIT Delhi, IIM Calcutta alumnus who quit high-paying job, became a monk due to..![submenu-img]() TBSE Result 2024: Tripura Board Class 10, 12 results DECLARED, direct link here

TBSE Result 2024: Tripura Board Class 10, 12 results DECLARED, direct link here![submenu-img]() Meghalaya Board Result 2024 DECLARED: MBOSE HSSLC Arts results available at megresults.nic.in, direct link here

Meghalaya Board Result 2024 DECLARED: MBOSE HSSLC Arts results available at megresults.nic.in, direct link here![submenu-img]() Meghalaya Board 10th, 12th Results 2024: MBOSE SSLC, HSSLC Arts results releasing today at megresults.nic.in

Meghalaya Board 10th, 12th Results 2024: MBOSE SSLC, HSSLC Arts results releasing today at megresults.nic.in![submenu-img]() Tripura TBSE 2024: Class 10th, 12th results to announce today; know timing, steps to check

Tripura TBSE 2024: Class 10th, 12th results to announce today; know timing, steps to check![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

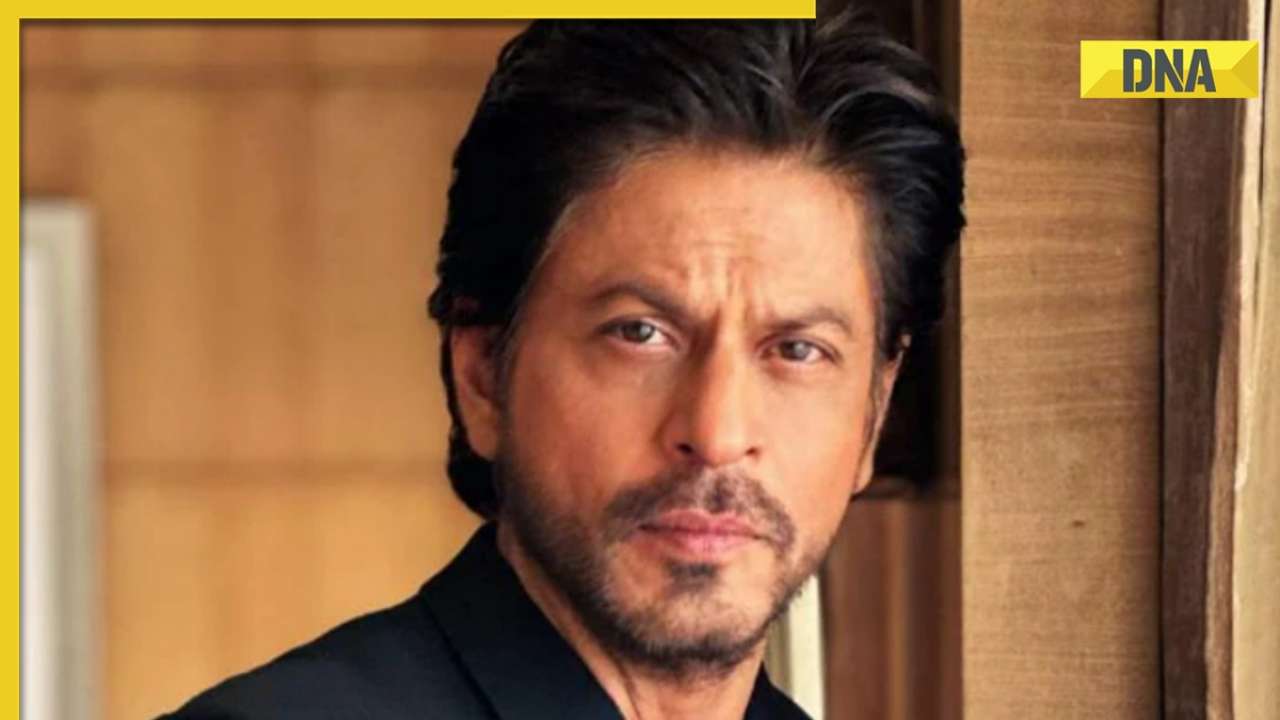

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

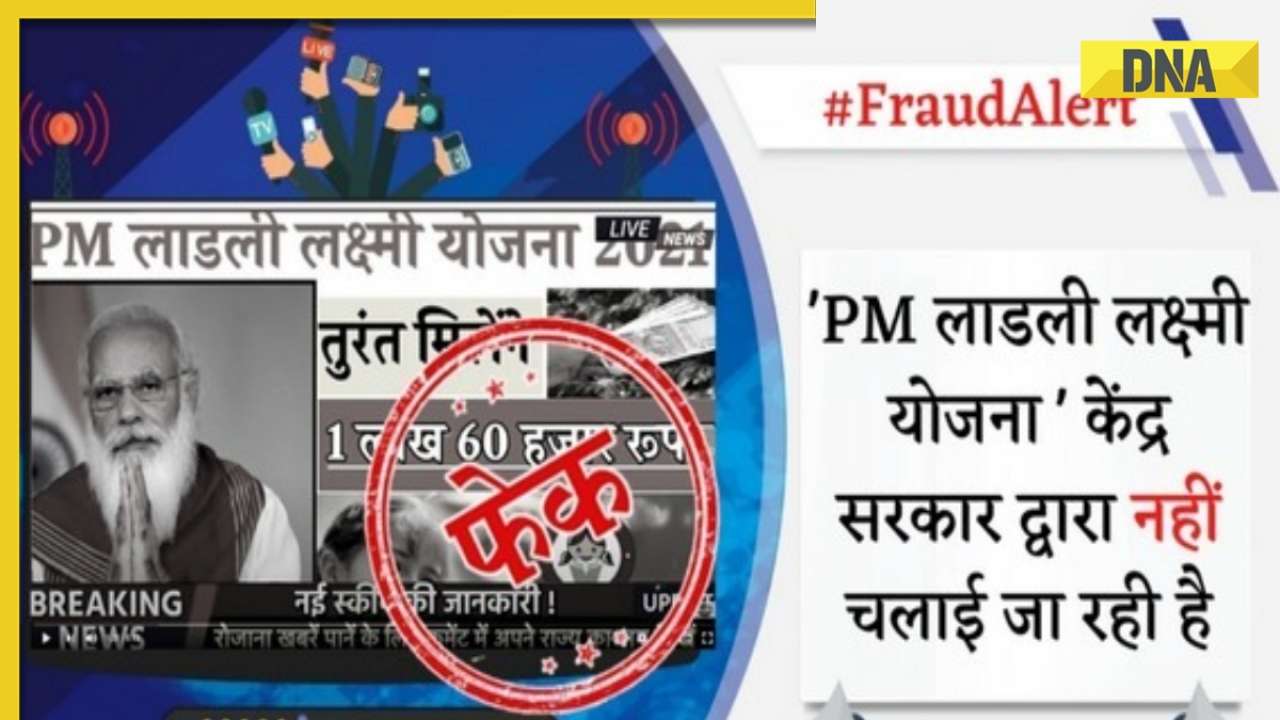

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() Assamese actress Aimee Baruah wins hearts as she represents her culture in saree with 200-year-old motif at Cannes

Assamese actress Aimee Baruah wins hearts as she represents her culture in saree with 200-year-old motif at Cannes ![submenu-img]() Aditi Rao Hydari's monochrome gown at Cannes Film Festival divides social media: 'We love her but not the dress'

Aditi Rao Hydari's monochrome gown at Cannes Film Festival divides social media: 'We love her but not the dress'![submenu-img]() AI models play volley ball on beach in bikini

AI models play volley ball on beach in bikini![submenu-img]() AI models set goals for pool parties in sizzling bikinis this summer

AI models set goals for pool parties in sizzling bikinis this summer![submenu-img]() In pics: Aditi Rao Hydari being 'pocket full of sunshine' at Cannes in floral dress, fans call her 'born aesthetic'

In pics: Aditi Rao Hydari being 'pocket full of sunshine' at Cannes in floral dress, fans call her 'born aesthetic'![submenu-img]() DNA Explainer: Why was Iranian president Ebrahim Raisi, killed in helicopter crash, regarded as ‘Butcher of Tehran’?

DNA Explainer: Why was Iranian president Ebrahim Raisi, killed in helicopter crash, regarded as ‘Butcher of Tehran’?![submenu-img]() DNA Explainer: Why did deceased Iranian President Ebrahim Raisi wear black turban?

DNA Explainer: Why did deceased Iranian President Ebrahim Raisi wear black turban?![submenu-img]() Iran President Ebrahim Raisi's death: Will it impact gold, oil prices and stock markets?

Iran President Ebrahim Raisi's death: Will it impact gold, oil prices and stock markets?![submenu-img]() Haryana Political Crisis: Will 3 independent MLAs support withdrawal impact the present Nayab Saini led-BJP government?

Haryana Political Crisis: Will 3 independent MLAs support withdrawal impact the present Nayab Saini led-BJP government?![submenu-img]() DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?

DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?![submenu-img]() British TV host calls Priyanka Chopra 'Chianca Chop Free', angry fans say 'this is huge disrespect'; video goes viral

British TV host calls Priyanka Chopra 'Chianca Chop Free', angry fans say 'this is huge disrespect'; video goes viral![submenu-img]() Deepika Padukone radiates 'mummy glow', spotted with baby bump in new video, netizens call her 'prettiest mom'

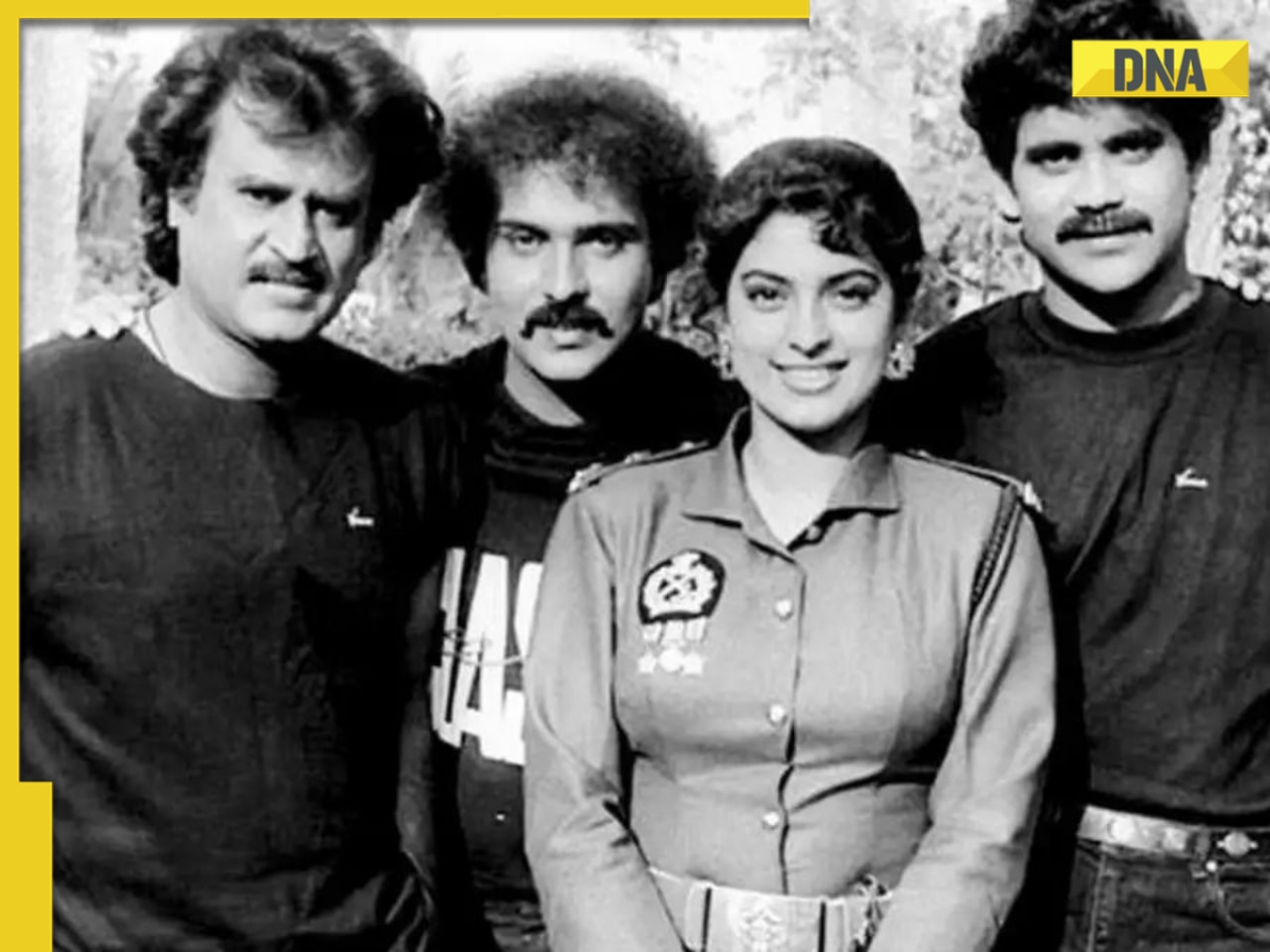

Deepika Padukone radiates 'mummy glow', spotted with baby bump in new video, netizens call her 'prettiest mom'![submenu-img]() India's biggest action film, had 1 hero, 7 villains, became superhit, made for Rs 6 crore, earned over Rs..

India's biggest action film, had 1 hero, 7 villains, became superhit, made for Rs 6 crore, earned over Rs..![submenu-img]() Neha Sharma says having morals 'doesn't take you very far' in Bollywood: 'Clearly why I am not...' | Exclusive

Neha Sharma says having morals 'doesn't take you very far' in Bollywood: 'Clearly why I am not...' | Exclusive![submenu-img]() This iconic film was made on suggestion by former Prime Minister, was rejected by Rajesh Khanna, Shashi Kapoor, earned..

This iconic film was made on suggestion by former Prime Minister, was rejected by Rajesh Khanna, Shashi Kapoor, earned..![submenu-img]() Meme dog Kabosu, that inspired Dogecoin, dies

Meme dog Kabosu, that inspired Dogecoin, dies![submenu-img]() Viral Video: Turtles flip over stranded friend in heartwarming rescue, internet hearts it

Viral Video: Turtles flip over stranded friend in heartwarming rescue, internet hearts it![submenu-img]() Shocking! Woman discovers intruder living in her bedroom for four months, details inside

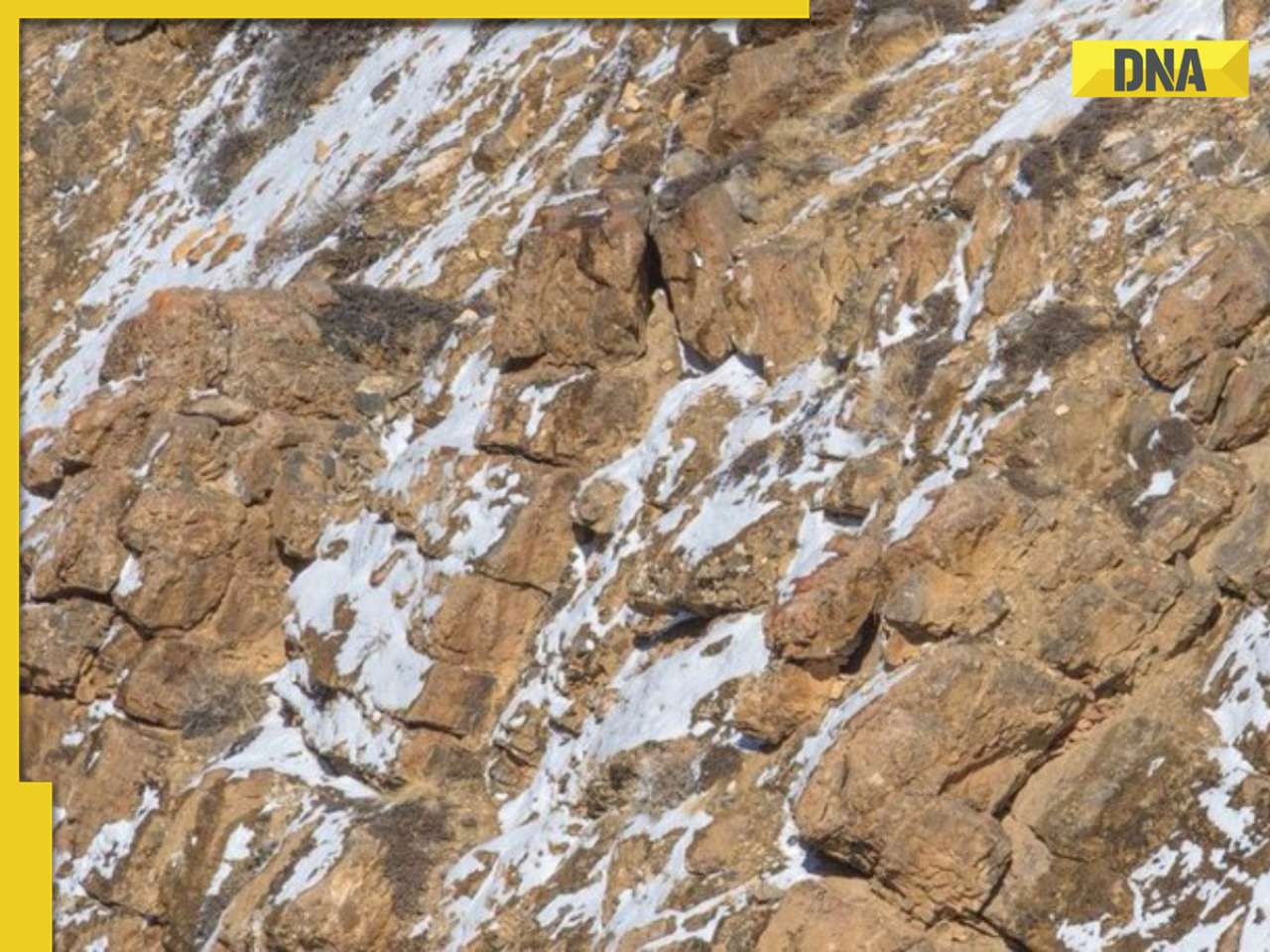

Shocking! Woman discovers intruder living in her bedroom for four months, details inside![submenu-img]() Can you spot 'ghost of the mountain'? Internet stumped by camouflaged snow leopard

Can you spot 'ghost of the mountain'? Internet stumped by camouflaged snow leopard![submenu-img]() Viral video: Women engage in physical altercation over Rs 100 dispute at medical shop

Viral video: Women engage in physical altercation over Rs 100 dispute at medical shop

)

)

)

)

)

)

)