Google announced it would not use artificial intelligence for weapons or to "cause or directly facilitate injury to people," as it unveiled a set of principles for these technologies.

Chief executive Sundar Pichai, in a blog post outlining the company's artificial intelligence policies, noted that even though Google won't use AI for weapons, "we will continue our work with governments and the military in many other areas" including cybersecurity, training, and search and rescue.

The news comes as Google faces pressure from employees and others over a contract with the US military, which the California tech giant said last week would not be renewed.

Recently, reports citing unnamed sources said that a Google cloud team executive told employees the company would not seek to renew the controversial contract after it expires next year. The contract was reported to be worth less than $10 million to Google, but was thought to have the potential to lead to more lucrative technology collaborations with the military.

Google did not respond to a request for comment. The company has said little publicly about Project Maven, which reportedly uses machine learning and engineering talent to help the Defense Department distinguish people and objects in drone videos. "We believe that Google should not be in the business of war," the employee petition reads, according to copies posted online.

"Therefore, we ask that Project Maven be cancelled, and that Google draft, publicize and enforce a clear policy stating that neither Google nor its contractors will ever build warfare technology."

The Electronic Frontier Foundation, an internet rights group, and the International Committee for Robot Arms Control (ICRAC) were among those who weighed in with support. "As military commanders come to see the object recognition algorithms as reliable, it will be tempting to attenuate or even remove human review and oversight for these systems," ICRAC said in an open letter.

With inputs from AFP Relax News

![submenu-img]() Balancing Risk and Reward: Tips and Tricks for Good Mobile Trading

Balancing Risk and Reward: Tips and Tricks for Good Mobile Trading![submenu-img]() Balmorex Pro [Is It Safe?] Real Customers Expose Hidden Dangers

Balmorex Pro [Is It Safe?] Real Customers Expose Hidden Dangers![submenu-img]() Sight Care Reviews (Real User EXPERIENCE) Ingredients, Benefits, And Side Effects Of Vision Support Formula Revealed!

Sight Care Reviews (Real User EXPERIENCE) Ingredients, Benefits, And Side Effects Of Vision Support Formula Revealed!![submenu-img]() Java Burn Reviews (Weight Loss Supplement) Real Ingredients, Benefits, Risks, And Honest Customer Reviews

Java Burn Reviews (Weight Loss Supplement) Real Ingredients, Benefits, Risks, And Honest Customer Reviews![submenu-img]() Gurucharan Singh is still unreachable after returning home, says Taarak Mehta producer Asit Modi: 'I have been trying..'

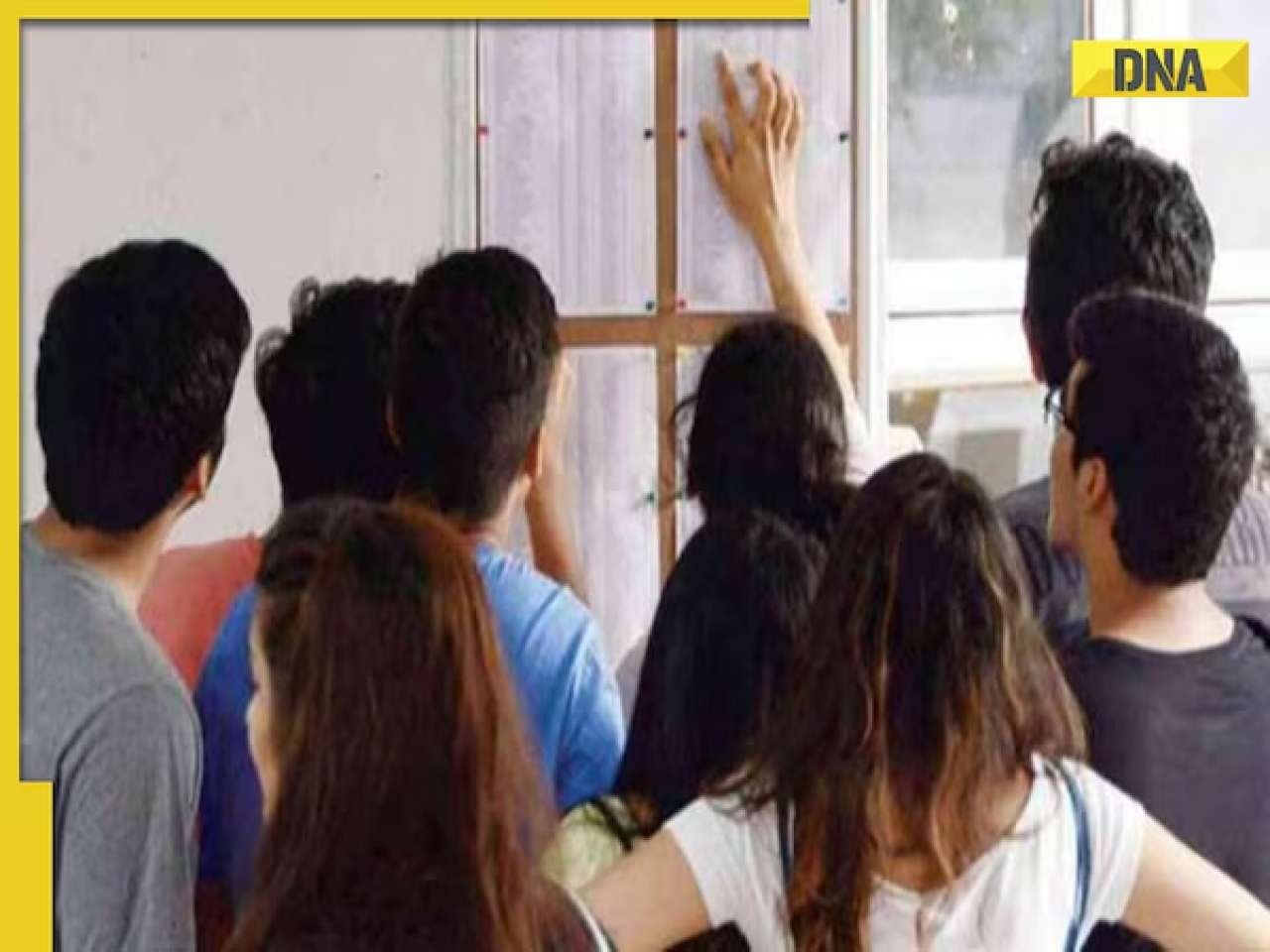

Gurucharan Singh is still unreachable after returning home, says Taarak Mehta producer Asit Modi: 'I have been trying..'![submenu-img]() RBSE 12th Result 2024 Live Updates: Rajasthan Board Class 12 results DECLARED, get direct link here

RBSE 12th Result 2024 Live Updates: Rajasthan Board Class 12 results DECLARED, get direct link here![submenu-img]() IIT graduate Indian genius ‘solved’ 161-year old maths mystery, left teaching to become CEO of…

IIT graduate Indian genius ‘solved’ 161-year old maths mystery, left teaching to become CEO of…![submenu-img]() RBSE 12th Result 2024 Live Updates: Rajasthan Board Class 12 results to be announced soon, get direct link here

RBSE 12th Result 2024 Live Updates: Rajasthan Board Class 12 results to be announced soon, get direct link here![submenu-img]() Meet doctor who cracked UPSC exam to become IAS officer but resigned after few years due to...

Meet doctor who cracked UPSC exam to become IAS officer but resigned after few years due to...![submenu-img]() IIT graduate gets job with Rs 45 crore salary package, fired after few years, buys Narayana Murthy’s…

IIT graduate gets job with Rs 45 crore salary package, fired after few years, buys Narayana Murthy’s…![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

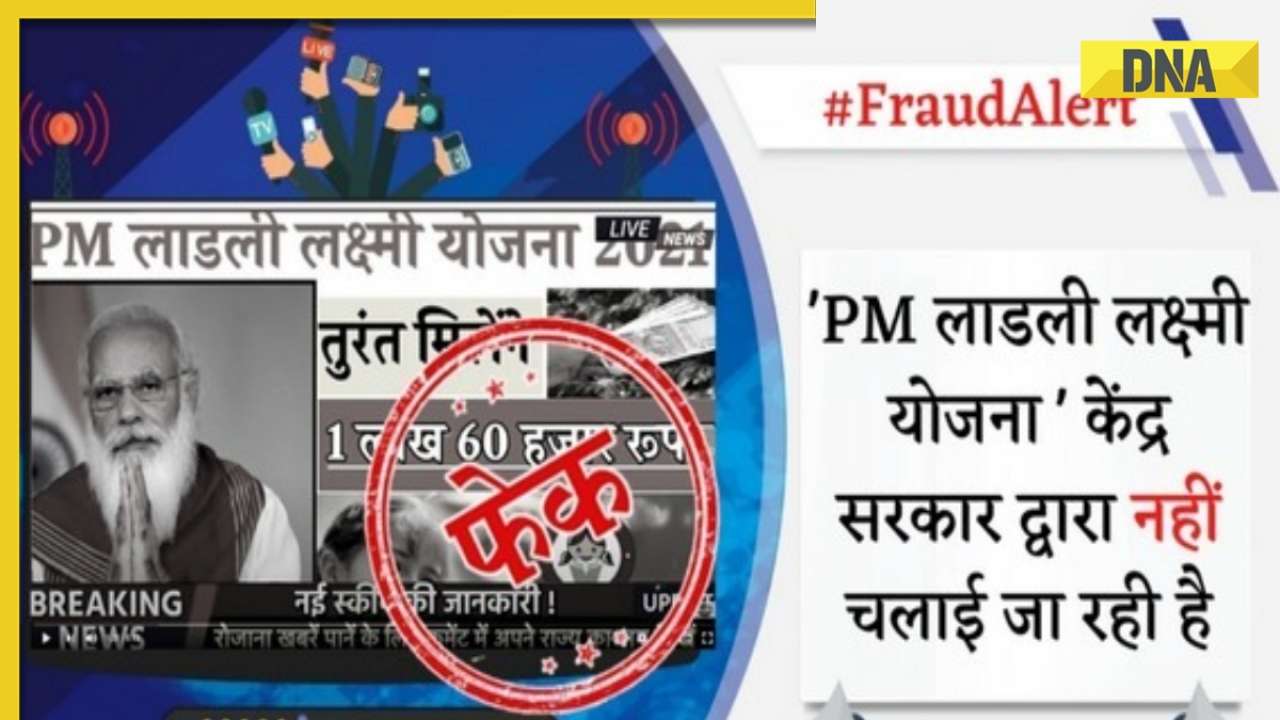

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() Urvashi Rautela mesmerises in blue celestial gown, her dancing fish necklace steals the limelight at Cannes 2024

Urvashi Rautela mesmerises in blue celestial gown, her dancing fish necklace steals the limelight at Cannes 2024![submenu-img]() Kiara Advani attends Women In Cinema Gala in dramatic ensemble, netizens say 'who designs these hideous dresses'

Kiara Advani attends Women In Cinema Gala in dramatic ensemble, netizens say 'who designs these hideous dresses'![submenu-img]() Influencer Diipa Büller-Khosla looks 'drop dead gorgeous' in metallic structured dress at Cannes 2024

Influencer Diipa Büller-Khosla looks 'drop dead gorgeous' in metallic structured dress at Cannes 2024![submenu-img]() Kiara Advani stuns in Prabal Gurung thigh-high slit gown for her Cannes debut, poses by the French Riviera

Kiara Advani stuns in Prabal Gurung thigh-high slit gown for her Cannes debut, poses by the French Riviera![submenu-img]() Heeramandi star Taha Shah Badussha makes dashing debut at Cannes Film Festival, fans call him ‘international crush’

Heeramandi star Taha Shah Badussha makes dashing debut at Cannes Film Festival, fans call him ‘international crush’![submenu-img]() Haryana Political Crisis: Will 3 independent MLAs support withdrawal impact the present Nayab Saini led-BJP government?

Haryana Political Crisis: Will 3 independent MLAs support withdrawal impact the present Nayab Saini led-BJP government?![submenu-img]() DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?

DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?![submenu-img]() What is inheritance tax?

What is inheritance tax?![submenu-img]() DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?

DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() Gurucharan Singh is still unreachable after returning home, says Taarak Mehta producer Asit Modi: 'I have been trying..'

Gurucharan Singh is still unreachable after returning home, says Taarak Mehta producer Asit Modi: 'I have been trying..'![submenu-img]() ‘Jo mujhse bulwana chahte ho…’: Angry Dharmendra lashes out after casting his vote in Lok Sabha Elections 2024

‘Jo mujhse bulwana chahte ho…’: Angry Dharmendra lashes out after casting his vote in Lok Sabha Elections 2024![submenu-img]() Deepika Padukone spotted with her baby bump as she steps out with Ranveer Singh to cast her vote in Lok Sabha elections

Deepika Padukone spotted with her baby bump as she steps out with Ranveer Singh to cast her vote in Lok Sabha elections![submenu-img]() Jr NTR surprises fans on birthday, announces NTR 31 with Prashanth Neel, shares details

Jr NTR surprises fans on birthday, announces NTR 31 with Prashanth Neel, shares details ![submenu-img]() 86-year-old Shubha Khote wins hearts by coming out to cast her vote in Lok Sabha elections, says meant to inspire voters

86-year-old Shubha Khote wins hearts by coming out to cast her vote in Lok Sabha elections, says meant to inspire voters![submenu-img]() Watch viral video: Man gets attacked after trying to touch ‘pet’ cheetah; netizens react

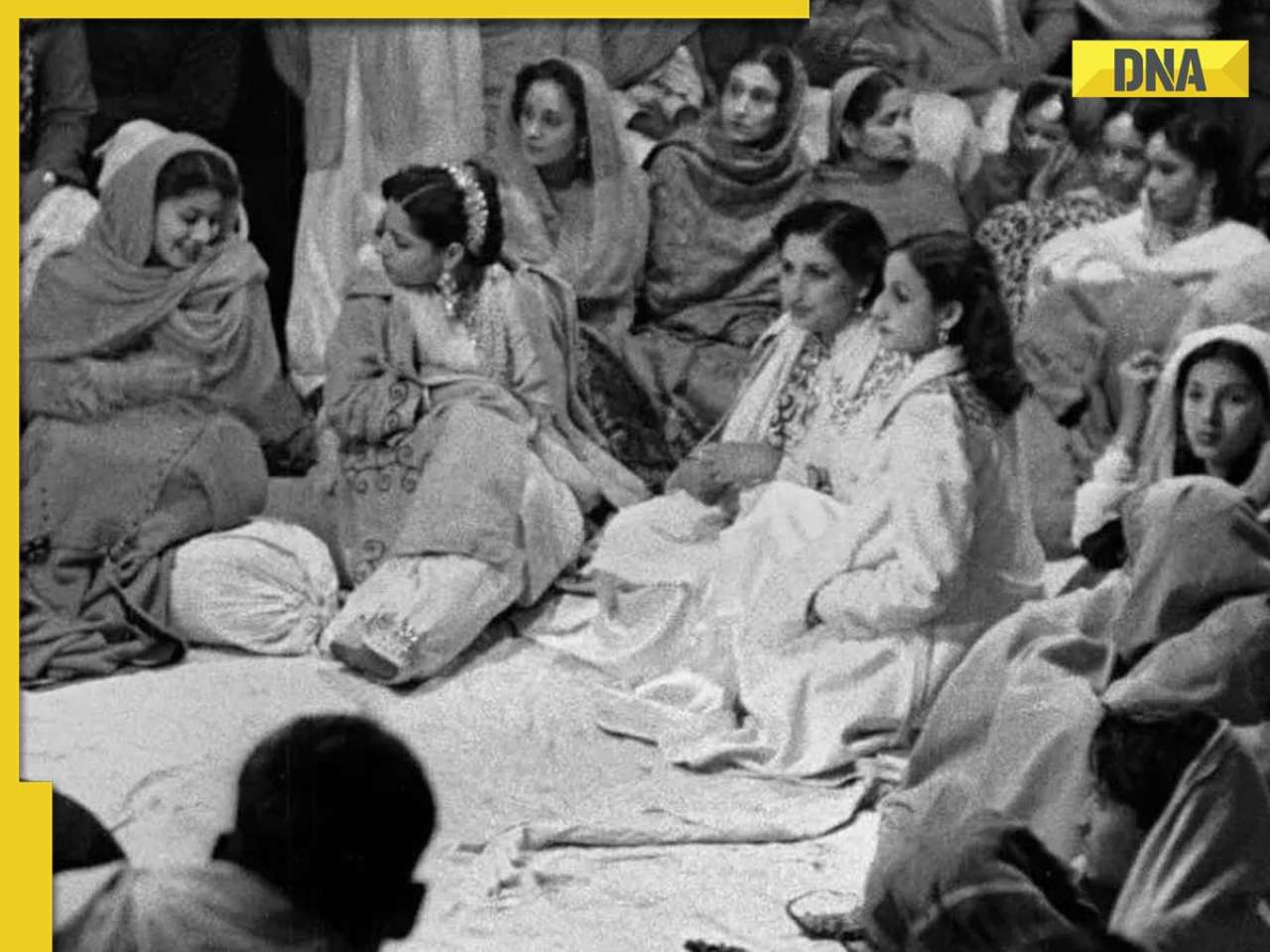

Watch viral video: Man gets attacked after trying to touch ‘pet’ cheetah; netizens react![submenu-img]() Real story of Lahore's Heermandi that inspired Netflix series

Real story of Lahore's Heermandi that inspired Netflix series![submenu-img]() 12-year-old Bengaluru girl undergoes surgery after eating 'smoky paan', details inside

12-year-old Bengaluru girl undergoes surgery after eating 'smoky paan', details inside![submenu-img]() Viral video: Pakistani man tries to get close with tiger and this happens next

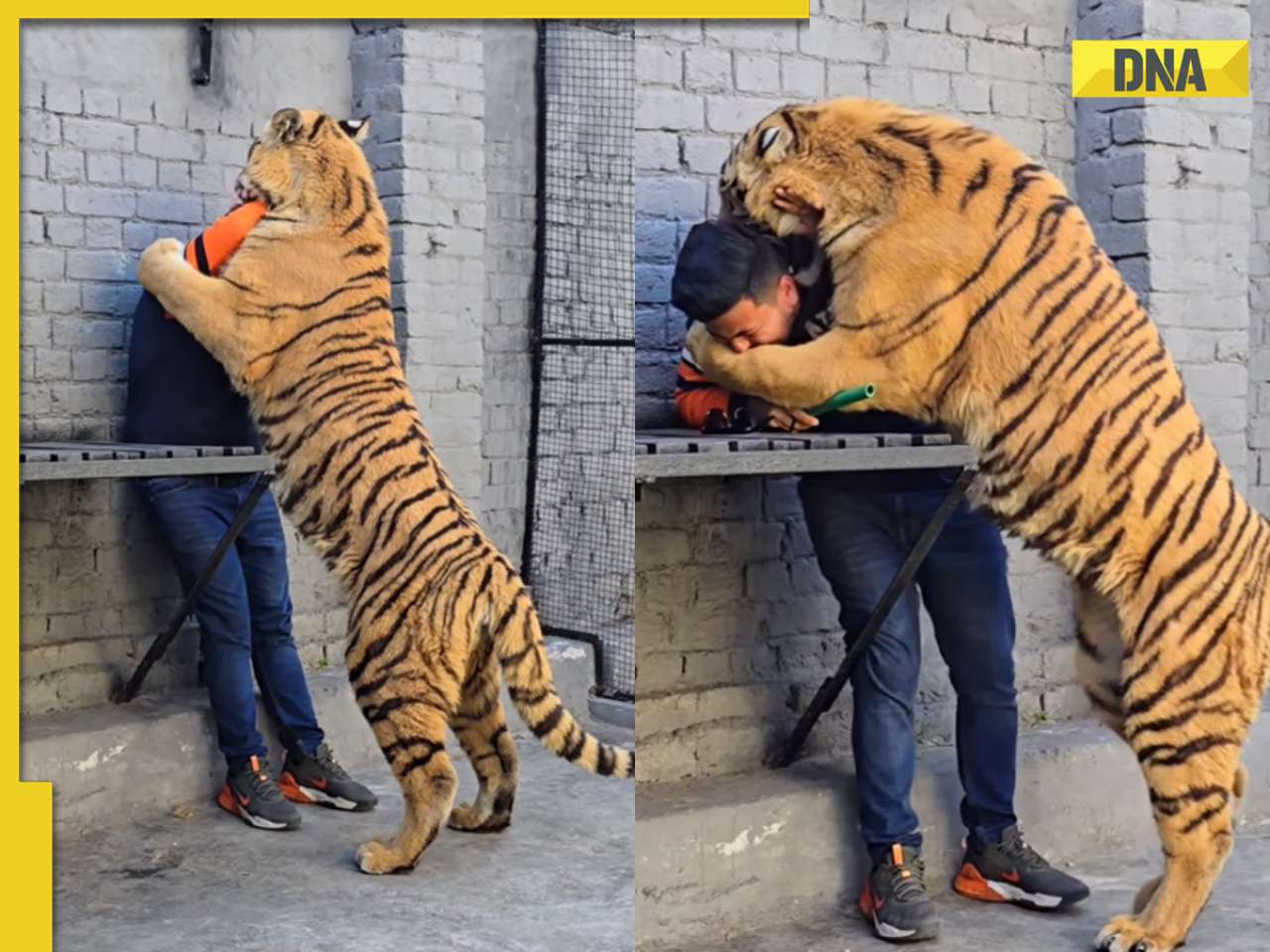

Viral video: Pakistani man tries to get close with tiger and this happens next![submenu-img]() Owl swallows snake in one go, viral video shocks internet

Owl swallows snake in one go, viral video shocks internet

)

)

)

)

)

)

)