For decades now, there has been an ongoing struggle between the masses and their governments over regulating the seemingly vast World Wide Web. While freedom activists such as Julian Assange and Edward Snowden have demanded that the internet remain free, 'the powers that be' have dedicated their efforts to controlling and monitoring it.

And despite the many ethical conflicts and some illegal spying, the web has largely remained unrestrained. Or so we believe.

Earlier this month, Google reported one of its users who possessed child abuse imagery in his Gmail account. And even as most cheered Google on for its "heroic" act, what everyone was really wondering was, "How often does Google go through our emails searching for objectionable content?"

Their answer: we don't! Or at least, "Not really."

So then how do they catch the bad guys?

Well, it turns out the tech giants (Google, Facebook, Microsoft and others) have a nexus. But it's not your regular nexus of evil, the NSA scandal notwithstanding. This is one of the good kinds of collaboration, called the Technology Coalition.

In 2009, Microsoft, along with Dartmouth College, developed a new technology to help identify and remove some of the "worst of the worst" images of child sexual exploitation from the Internet. Being the good guys that they are, they shared this technology with their fellow tech giants and even with the National Center for Missing & Exploited Children (NCMEC) in the US.

Dubbed PhotoDNA, it works solely to find and disrupt the spread of child pornography. PhotoDNA was subsequently deployed on the Bing, OneDrive and Outlook.com services, and later on Facebook and Gmail.

How does PhotoDNA work?

PhotoDNA is an image-matching technology that helps identify images that may depict child abuse or be pornographic in nature. "It creates a unique signature for a digital image, something like a fingerprint, which can be compared with the signatures of other images to find copies of that image," Microsoft explains on its blog.
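The fingerprint-and-compare idea can be illustrated with a small sketch. This is not PhotoDNA's actual algorithm, which the article does not describe; it uses "average hashing", a simple and well-known perceptual hashing technique, purely as a stand-in. The function names, the tiny 4x4 grayscale grids and the `max_distance` threshold are all assumptions made for illustration.

```python
def average_hash(pixels):
    """Fingerprint a grayscale image (rows of 0-255 ints) as a string of bits.

    Each bit records whether a pixel is brighter than the image's mean,
    so uniform brightness changes tend to leave the fingerprint intact.
    """
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(a, b):
    """Count the positions at which two equal-length fingerprints differ."""
    if len(a) != len(b):
        raise ValueError("fingerprints must have the same length")
    return sum(x != y for x, y in zip(a, b))


def matches_known(fingerprint, known_fingerprints, max_distance=2):
    """Return True if the fingerprint is close to any known fingerprint.

    max_distance is an illustrative threshold, not a value from any real system.
    """
    return any(
        hamming_distance(fingerprint, known) <= max_distance
        for known in known_fingerprints
    )


# Hypothetical 4x4 grayscale "images" used only for this demonstration.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [240, 20, 250, 40],
    [235, 35, 245, 45],
]
# A slightly brightened copy of the original.
brightened = [[min(255, value + 5) for value in row] for row in original]
# An inverted image, standing in for unrelated content.
unrelated = [[255 - value for value in row] for row in original]

known = {average_hash(original)}
print(matches_known(average_hash(brightened), known))
print(matches_known(average_hash(unrelated), known))
```

In this sketch the brightened copy still matches the stored fingerprint while the inverted image does not, which is the property that lets a service compare signatures rather than have a person look at each image. Production systems are considerably more robust than this toy example.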

"When child pornography images are shared and viewed amongst predators online, it is not simply the distribution of objectionable content – it is community rape of a child. These crimes turn a single horrific moment of sexual abuse of a child into an unending series of violations of that child. We simply cannot allow people to continue trading these horrifying images online when we have the technology to help do something about it," they share on their blog.

How effective is it?

In 2011 alone, PhotoDNA evaluated more than two billion images, leading to the identification of more than 1,000 matches on SkyDrive and 1,500 matches through Bing’s image search indexing.

However, Google's Chief Legal Officer, David Drummond, pointed out in the Telegraph: "While computers can detect the colour of naked flesh, only humans can effectively differentiate between innocent pictures of children and images of abuse. And even humans don’t get it 100% right."

But it works, Google confirms. "Since 2008, we have used 'hashing' technology to tag known child sexual abuse images, allowing us to identify duplicate images which may exist elsewhere. Each offending image in effect gets a unique fingerprint that our computers can recognise without humans having to view them again," Drummond writes. (By hashing, he means PhotoDNA. They don't use the actual word. It must be a competitor thing.)

Available freely

Microsoft has since donated the technology, making PhotoDNA available to law enforcement globally at no charge via NetClean.

So it is a small relief to know that it is not people at big corporations reading our emails but their futuristic technology scanning them instead, and we can take comfort in the fact that it is at least for a good cause. "We’re in the business of making information widely available, but there’s certain 'information' that should never be created or found," explains Google. "We can do a lot to ensure it’s not available online – and that when people try to share this disgusting content they are caught and prosecuted."

You are right, dear Google. But the question remains: can you trust this technology to do the right thing?

![submenu-img]() BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000

BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s…

Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s… ![submenu-img]() Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...

Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

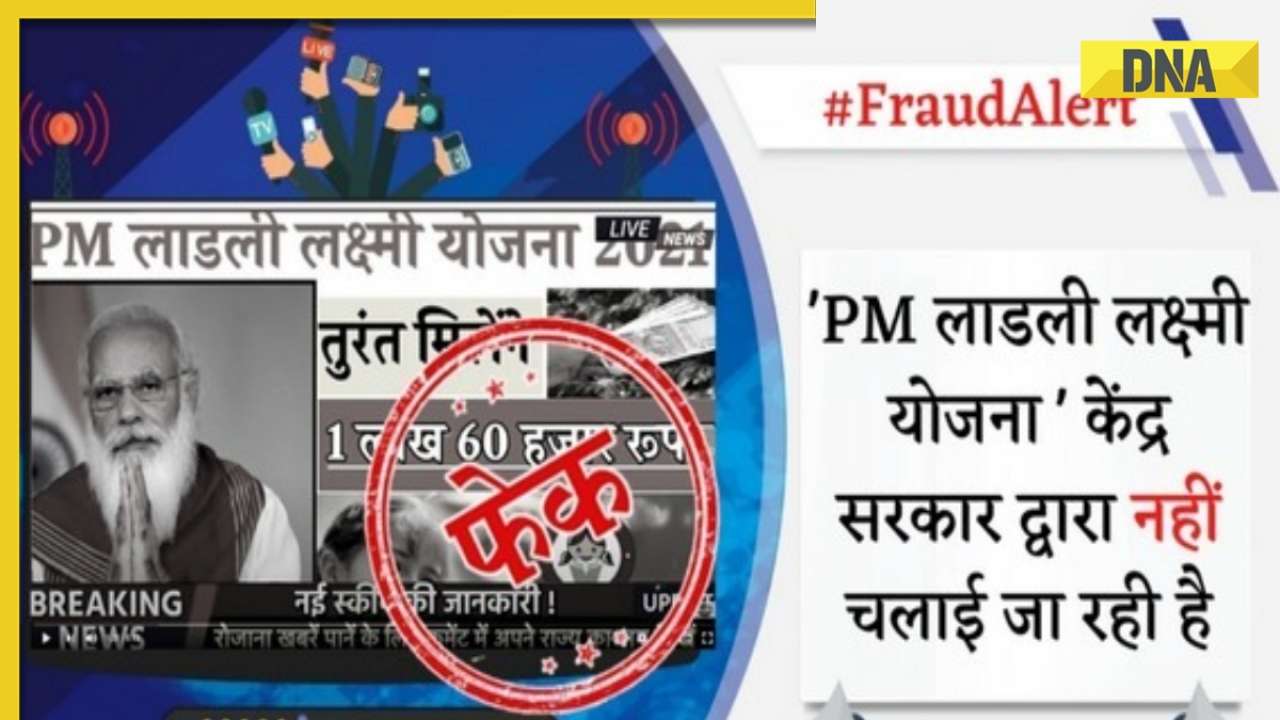

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding

In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding![submenu-img]() Actors who died due to cosmetic surgeries

Actors who died due to cosmetic surgeries![submenu-img]() See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol

See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol ![submenu-img]() In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi

In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi![submenu-img]() Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch

Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch![submenu-img]() What is inheritance tax?

What is inheritance tax?![submenu-img]() DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?

DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() DNA Explainer: What is India's stand amid Iran-Israel conflict?

DNA Explainer: What is India's stand amid Iran-Israel conflict?![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas

Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas![submenu-img]() This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...

This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...![submenu-img]() Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'

Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'![submenu-img]() IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs

IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs![submenu-img]() 'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya

'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya![submenu-img]() KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings

KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings![submenu-img]() IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch

IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch![submenu-img]() Viral video: Teacher's cute way to capture happy student faces melts internet, watch

Viral video: Teacher's cute way to capture happy student faces melts internet, watch![submenu-img]() Woman attends online meeting on scooter while stuck in traffic, video goes viral

Woman attends online meeting on scooter while stuck in traffic, video goes viral![submenu-img]() Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it

Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it![submenu-img]() Pakistani teen receives life-saving heart transplant from Indian donor, details here

Pakistani teen receives life-saving heart transplant from Indian donor, details here![submenu-img]() Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

)

)

)

)

)

)

)