MUMBAI: “Before the discovery of Australia, people in the Old World were convinced that all swans were white, an unassailable belief as it seemed completely confirmed by empirical evidence. The sighting of the first black swan might have been an interesting surprise for a few ornithologists (and others extremely concerned with the colouring of birds), but that is not where the significance of the story lies. It illustrates a severe limitation to our learning from observations or experience and the fragility of our knowledge. One single observation can invalidate a general statement derived from millennia of confirmatory sightings of millions of white swans. All you need is one (and I am told, quite ugly) black bird.”

That’s the opening paragraph from Nassim Nicholas Taleb’s book The Black Swan: The Impact of the Highly Improbable. “The central idea of this book concerns our blindness with respect to randomness, particularly the large deviations,” the author writes.

It is these large deviations from the normal that Taleb calls the “black swans.” Take the attack on the twin buildings of the World Trade Centre in New York on September 11, 2001. Or the war in the author’s native country, Lebanon, which people felt would end in a matter of days, but which went on for seventeen years.

What makes these black swans particularly dangerous is that, most of the time, they are unexpected. “Consider the turkey that is fed every day. Every single feeding will firm up the bird’s belief that it is the general rule of life to be fed every day by friendly members of the human race ‘looking out for its best interests,’ as a politician would say. On the afternoon of the Wednesday before Thanksgiving, something unexpected will happen to the turkey. It will incur a revision of belief.”

Given this, “what we don’t know” becomes more important than “what we know.” However, this does not stop individuals from coming up with explanations for everything, even though most such events cannot be explained. As Taleb writes, “as I formulated my ideas on the perception of random events, I developed the governing impression that our minds are wonderful explanation machines, capable of making sense out of almost anything, capable of mounting explanations for all manner of phenomena, and generally incapable of accepting the idea of unpredictability. These events were unexplainable, but intelligent people thought they were capable of providing convincing explanations for them - after the fact. Furthermore, the more intelligent the person, the better sounding the explanation.”

Taleb provides an interesting example of such explanations, using two Bloomberg News headlines that appeared within half an hour of one another in December 2003, on the day Saddam Hussein was captured.

“Bloomberg News flashed the following headline at 13:01: US Treasuries Rise; Hussein Capture May Not Curb Terrorism.”

“At 13:31 they issued the next bulletin: US Treasuries Fall; Hussein Capture Boosts Allure of Risky Assets.”

As Taleb writes, “It happens all the time: a cause is proposed to make you swallow the news and make news more concrete.”

Now, that doesn’t mean that things happened because of the reasons being offered. “The problem of overcausation does not lie with the journalist, but with the public. Nobody would pay one dollar to buy a series of abstract statistics reminiscent of a boring college lecture. We want to be told stories, and there is nothing wrong with that - except that we should check more thoroughly whether the story provides consequential distortions of reality… Just consider that newspapers try to get impeccable facts, but weave them into a narrative in such a way as to convey the impression of causality (and knowledge). There are fact checkers, not intellect-checkers. Alas.”

As far as explanations are concerned, the jury is still out on why the Dow Jones Industrial Average crashed on October 19, 1987. And once it had crashed, traders expected the markets to crash again every October. “After the stock market crash of 1987, half of America’s traders braced for another one every October - not taking into account that there was no antecedent to the first one. We worry too late - ex post. Mistaking a naïve observation of the past as something definitive or representative is the one and only cause of our inability to understand the Black Swan,” writes Taleb.

This ex-post reasoning affects those who work in professions having high randomness. “People in professions of high randomness (such as in the markets) can suffer more than their share of toxic effect of look-back settings. I should have sold my portfolio at the top; I could have bought that stock years ago for pennies and I would now be driving a pink convertible; etcetera.”

The way out of this constant worry is to keep a daily diary. “If you work in a randomness-laden profession, as we see, you are likely to suffer burnout effects from that constant second-guessing of your past actions in terms of what played out subsequently. Keeping a diary is the least you can do in these circumstances.”

Because we worry about “black swans” mostly after they have occurred, we are not prepared for them when they happen. Bankers are one such group. As Taleb writes, “In the summer of 1982, large American banks lost close to all their past earnings (cumulatively), about everything that they ever made in the history of American banking - everything. They had been lending to South and Central American countries that all defaulted at the same time - ‘an event of an exceptional nature.’ So it took just one summer to figure out that this was a sucker’s business and that all their earnings came from a very risky game. All that while the bankers led everyone, especially themselves, into believing that they were ‘conservative.’ ...They are not conservative; just phenomenally skilled at self-deception by burying the possibility of a large, devastating loss under the rug.” Taleb makes the same point with the turkey: “From the standpoint of the turkey, the non-feeding of the one thousand and first day is a Black Swan. For the butcher, it is not, since its occurrence is not unexpected. So you can see here that the Black Swan is a sucker’s problem.”

Is there a way out of these ‘black swan’ events? As Taleb writes, “The probabilities of rare events are not computable; the effect of an event on us is considerably easier to ascertain (the rarer the event, the fuzzier the odds). We can have a clear idea of the consequences of an event even if we do not know how likely it is to occur. I don’t know the odds of an earthquake, but I can imagine how San Francisco might be affected by one. This idea that in order to make a decision you need to focus on the consequences (which you can know) rather than the probability (which you can’t know) is the central idea of uncertainty… All you have to do is mitigate the consequences. As I said, if my portfolio is exposed to the market crash, the odds of which I cannot compute, all I have to do is buy insurance, or get out and invest the amounts I am not willing to ever lose in the less risky securities.”

![submenu-img]() BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000

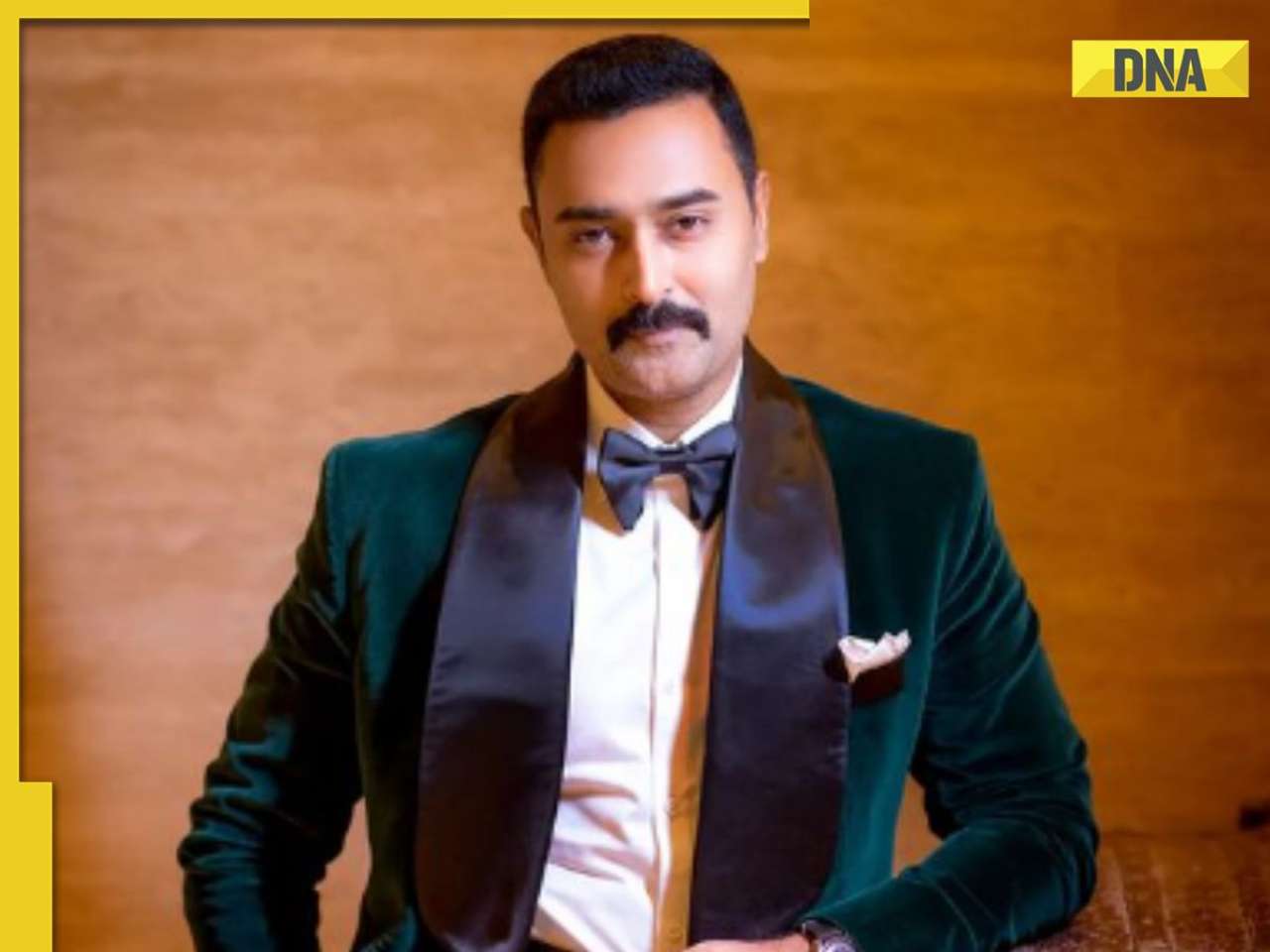

BMW i5 M60 xDrive launched in India, all-electric sedan priced at Rs 11950000![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s…

Meet Reliance’s highest paid employee, gets over Rs 240000000 salary, he is Mukesh Ambani’s… ![submenu-img]() Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...

Meet lesser-known relative of Mukesh Ambani, Anil Ambani, has worked with BCCI, he is married to...![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding

In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding![submenu-img]() Actors who died due to cosmetic surgeries

Actors who died due to cosmetic surgeries![submenu-img]() See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol

See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol ![submenu-img]() In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi

In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi![submenu-img]() Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch

Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch![submenu-img]() What is inheritance tax?

What is inheritance tax?![submenu-img]() DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?

DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() DNA Explainer: What is India's stand amid Iran-Israel conflict?

DNA Explainer: What is India's stand amid Iran-Israel conflict?![submenu-img]() This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore

This superstar was arrested several times by age 17, thrown out of home, once had just Rs 250, now worth Rs 6600 crore![submenu-img]() Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes

Made in just Rs 95,000, this film was a superhit, but destroyed lead actress' career, saw controversy over bold scenes![submenu-img]() Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas

Meet 72-year-old who earns Rs 280 cr per film, Asia's highest-paid actor, bigger than Shah Rukh, Salman, Akshay, Prabhas![submenu-img]() This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...

This star, who once lived in chawl, worked as tailor, later gave four Rs 200-crore films; he's now worth...![submenu-img]() Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'

Tamil star Prasanna reveals why he chose series Ranneeti for Hindi debut: 'Getting into Bollywood is not...'![submenu-img]() IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs

IPL 2024: Virat Kohli, Rajat Patidar fifties and disciplined bowling help RCB beat Sunrisers Hyderabad by 35 runs![submenu-img]() 'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya

'This is the problem in India...': Wasim Akram's blunt take on fans booing Mumbai Indians skipper Hardik Pandya![submenu-img]() KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

KKR vs PBKS, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings

KKR vs PBKS IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Punjab Kings![submenu-img]() IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch

IPL 2024: KKR star Rinku Singh finally gets another bat from Virat Kohli after breaking previous one - Watch![submenu-img]() Viral video: Teacher's cute way to capture happy student faces melts internet, watch

Viral video: Teacher's cute way to capture happy student faces melts internet, watch![submenu-img]() Woman attends online meeting on scooter while stuck in traffic, video goes viral

Woman attends online meeting on scooter while stuck in traffic, video goes viral![submenu-img]() Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it

Viral video: Pilot proposes to flight attendant girlfriend before takeoff, internet hearts it![submenu-img]() Pakistani teen receives life-saving heart transplant from Indian donor, details here

Pakistani teen receives life-saving heart transplant from Indian donor, details here![submenu-img]() Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

Viral video: Truck driver's innovative solution to beat the heat impresses internet, watch

)

)

)

)

)

)