Deepfake technology manipulates real photos or videos with the help of Artificial Intelligence, turning them into fake photos and videos.

In today's show, we will tell you about a threat that could pose a bigger challenge to the world than terrorism: the Deepfake video, a technique that can be used to discredit anyone by producing a fake video in a few hours.

To understand this, first, you will have to watch some fake videos, which are quite viral on social media. Some people are enjoying these fake videos, but there are many people who are worried. And to understand what these people are worried about, you have to watch these videos first.

This is Deepfake technology, which manipulates real photos or videos with the help of Artificial Intelligence, turning them into fake photos and videos for a specific purpose. All this is done so seamlessly that it becomes difficult to distinguish real pictures and videos from fake ones. This is why it is called the Deepfake technique.

It can happen to big celebrities, it can happen to the prime minister and ministers of any country and it can happen to big industrialists and common people too. Think how dangerous this technique is.

Such Deepfake videos are often spread through social media, and Facebook is at the forefront of this. Yet Facebook founder Mark Zuckerberg himself has fallen prey to a Deepfake.

Some time ago, a fake video of him went viral, and even then it was difficult for people to tell whether the video was real or fake. The same has also happened to former US President Barack Obama.

Now you must have understood why we are saying that this technology can become a bigger challenge for the world than terrorism. To understand this, now you have to look at some figures.

Every day, 180 million photos are uploaded to social media around the world. By this reckoning, the number of photos uploaded to the internet in a week equals the current population of the world.

Crores of these pictures are in the form of selfies and the number of pictures of women is much more than that of men. If you are a woman, today, you may have posted a picture of yourself on social media, but do you have any idea what cybercriminals can do with your pictures?

With the help of Deepfake Technology, these pictures can be easily converted into pornographic images. And you can understand this through a study done in 2020.

This study was conducted by a company that detects fake content on the Internet. According to it, photos of one lakh (100,000) women posted on social media have been converted into pornographic images with the help of this technique.

How Deepfake technology works

With the help of Artificial Intelligence (AI), facial changes are made to create a fake video. Often, the voice is copied from the original video and put into the fake one, so the result feels like a real video. Such a video can also be called a morphed video, a doctored video, or synthetic media.

How you can avoid Deepfake videos

Deepfake videos are easier to identify than Deepfake photos, and there are a few ways to do this. The first is to look at the background. A Deepfake video tries to make the person blend in with the background, but if you notice that the video focuses only on the face and the background is deliberately hidden, you may be looking at a fake video.

The second way is to develop cameras in future that attach a kind of digital signature to every picture they take and encrypt it. This would make it harder for criminals to misuse those pictures.
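The idea of a camera that signs its pictures can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any actual camera works: it uses a keyed hash (HMAC) from the standard library as a stand-in for a true public-key digital signature, it leaves out the encryption step, and the names `CAMERA_KEY`, `sign_photo` and `is_untampered` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret held inside the camera. A real device would more
# likely use a public-key signature scheme than a shared secret like this.
CAMERA_KEY = b"example-camera-secret"


def sign_photo(photo_bytes: bytes, key: bytes = CAMERA_KEY) -> str:
    """Return a hex tag tied to the exact bytes of the photo."""
    return hmac.new(key, photo_bytes, hashlib.sha256).hexdigest()


def is_untampered(photo_bytes: bytes, tag: str, key: bytes = CAMERA_KEY) -> bool:
    """Check that the photo still matches the tag issued at capture time."""
    expected = sign_photo(photo_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Placeholder bytes standing in for a real image file.
original = b"raw image bytes straight from the sensor"
tag = sign_photo(original)

# Any change to the picture, however small, changes the bytes.
doctored = original + b" with a swapped face"

print(is_untampered(original, tag))
print(is_untampered(doctored, tag))
```

A picture that has been altered after capture no longer matches its tag, so anyone holding the key can tell that it is not the picture the camera took.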

The third way, and an easy one, is to share as few photos as possible on social media and to avoid posting close-up pictures of your face.

The fourth way is to develop Artificial Intelligence that recognizes Deepfakes quickly, and to make it available to ordinary people so that they can avoid falling prey to fake news and fake videos.

Most of the photographs which are easily tampered with are Selfies.

In Selfies, the face is clearly visible and the resolution is generally good, so it becomes easy to graft a face taken from a selfie onto a pornographic photo. It is very dangerous to put a close-up of your face on social media.

Apart from this, if you are fond of taking selfies from different angles, you should give up that habit too, because these different angles of your face make the task of the Artificial Intelligence preparing a Deepfake very easy. With them, videos can be produced that are very hard to recognize as fake, because they contain almost all the angles of your face.

According to a report in the year 2019, 14,000 such videos were identified on social media, which were produced with the help of Deepfake software. And the important thing is that 96% of these videos were related to pornography.

Why is the term Deepfake used for such videos?

In the year 2017, a user named "deepfakes" on the website Reddit started using a face-swapping technique for pornography. It was from here that the word Deepfake came to the world and such videos came to be called Deepfake videos.

However, there is another side to this technology: if it is used properly, it can bring about many positive changes.

For the past few days, software named MyHeritage has been in the news. With its help, you can convert any picture into a 10-second video and bring old photographs to life.

Pictures of Shaheed Bhagat Singh, Swami Vivekananda, Lokmanya Tilak, the famous Hindi writer Munshi Premchand, Lal Bahadur Shastri and the famous French ruler Napoleon Bonaparte have been converted into videos with the help of this software. These videos are enough to show that this technique can be useful for us if Artificial Intelligence is used in the right direction.

![submenu-img]() Weather update: IMD predicts light to moderate rain, thunderstorms in Delhi-NCR; check forecast here

Weather update: IMD predicts light to moderate rain, thunderstorms in Delhi-NCR; check forecast here![submenu-img]() 'I can't breathe': Black man in Ohio pleads as police officers pin him to floor, then...

'I can't breathe': Black man in Ohio pleads as police officers pin him to floor, then...![submenu-img]() Delhi HC raps CM Kejriwal, accuses him of prioritising political interest by continuing as CM after arrest

Delhi HC raps CM Kejriwal, accuses him of prioritising political interest by continuing as CM after arrest![submenu-img]() Manipur: Two CRPF personnel killed in Kuki militants' attack in Naransena area

Manipur: Two CRPF personnel killed in Kuki militants' attack in Naransena area![submenu-img]() These 9 Indian dishes make it to the list of ‘best stews in the world’

These 9 Indian dishes make it to the list of ‘best stews in the world’![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

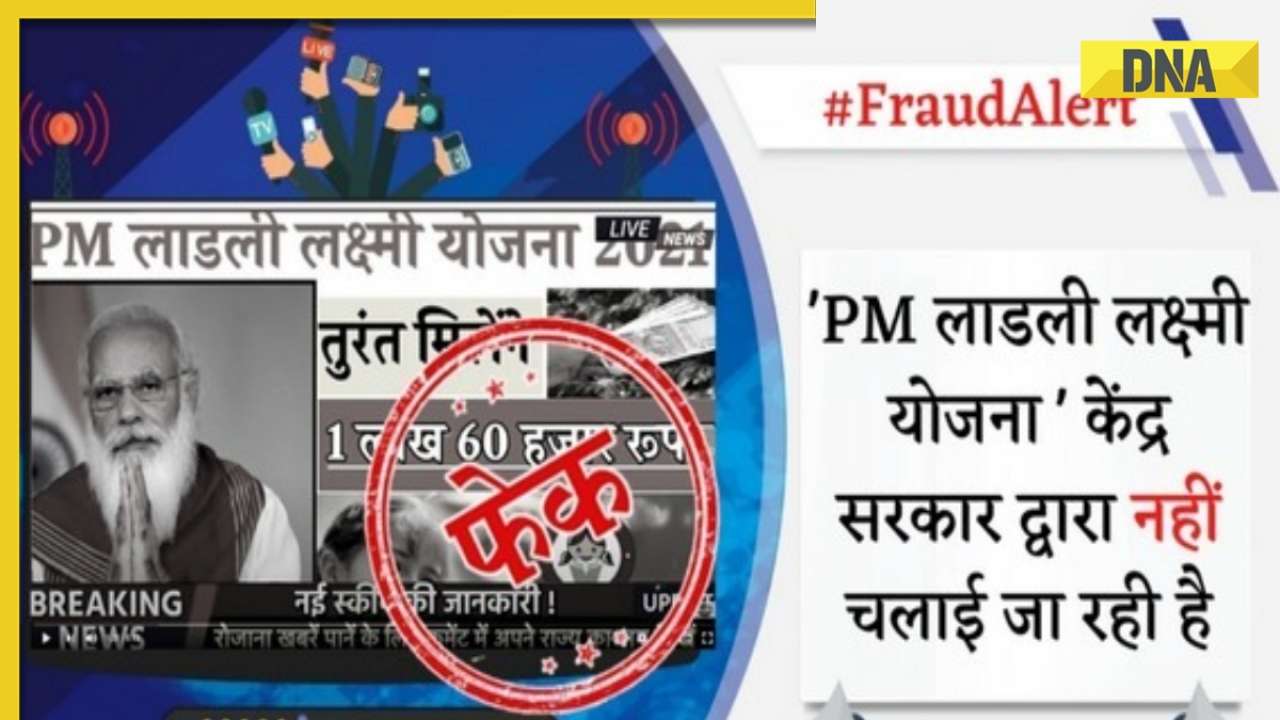

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding

In pics: Arti Singh stuns in red lehenga as she ties the knot with beau Dipak Chauhan in dreamy wedding![submenu-img]() Actors who died due to cosmetic surgeries

Actors who died due to cosmetic surgeries![submenu-img]() See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol

See inside pics: Malayalam star Aparna Das' dreamy wedding with Manjummel Boys actor Deepak Parambol ![submenu-img]() In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi

In pics: Salman Khan, Alia Bhatt, Rekha, Neetu Kapoor attend grand premiere of Sanjay Leela Bhansali's Heeramandi![submenu-img]() Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch

Streaming This Week: Crakk, Tillu Square, Ranneeti, Dil Dosti Dilemma, latest OTT releases to binge-watch![submenu-img]() DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?

DNA Explainer: Why Harvey Weinstein's rape conviction was overturned, will beleaguered Hollywood mogul get out of jail?![submenu-img]() What is inheritance tax?

What is inheritance tax?![submenu-img]() DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?

DNA Explainer: What is cloud seeding which is blamed for wreaking havoc in Dubai?![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() Krishna Mukherjee accuses Shubh Shagun producer of harassing, threatening her: ‘I was changing clothes when...'

Krishna Mukherjee accuses Shubh Shagun producer of harassing, threatening her: ‘I was changing clothes when...'![submenu-img]() Meet 90s top Bollywood actress, who gave hits with Shah Rukh, Salman, Aamir, one mistake ended career; has now become…

Meet 90s top Bollywood actress, who gave hits with Shah Rukh, Salman, Aamir, one mistake ended career; has now become…![submenu-img]() This actress, who once worked as pre-school teacher, changed diapers, later gave six Rs 100-crore films; is now worth…

This actress, who once worked as pre-school teacher, changed diapers, later gave six Rs 100-crore films; is now worth…![submenu-img]() 'There were days when I didn't want to probably live': Adhyayan Suman opens up on rough patch in his career

'There were days when I didn't want to probably live': Adhyayan Suman opens up on rough patch in his career![submenu-img]() This low-budget film with no star is 2024's highest-grossing Indian film; beat Fighter, Shaitaan, Bade Miyan Chote Miyan

This low-budget film with no star is 2024's highest-grossing Indian film; beat Fighter, Shaitaan, Bade Miyan Chote Miyan![submenu-img]() World wrestling body threatens to reimpose ban on WFI if...

World wrestling body threatens to reimpose ban on WFI if...![submenu-img]() IPL 2024: Jonny Bairstow, Shashank Singh special power Punjab Kings to record run-chase against KKR

IPL 2024: Jonny Bairstow, Shashank Singh special power Punjab Kings to record run-chase against KKR![submenu-img]() DC vs MI, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

DC vs MI, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() DC vs MI IPL 2024 Dream11 prediction: Fantasy cricket tips for Delhi Capitals vs Mumbai Indians

DC vs MI IPL 2024 Dream11 prediction: Fantasy cricket tips for Delhi Capitals vs Mumbai Indians![submenu-img]() Yuvraj Singh named ICC Men's T20 World Cup 2024 Ambassador

Yuvraj Singh named ICC Men's T20 World Cup 2024 Ambassador![submenu-img]() Watch: Lioness teaches cubs to climb tree, adorable video goes viral

Watch: Lioness teaches cubs to climb tree, adorable video goes viral![submenu-img]() Viral video: Little girl's impressive lion roar wins hearts on internet, watch

Viral video: Little girl's impressive lion roar wins hearts on internet, watch![submenu-img]() Who is Sangeet Singh? Man arrested for posing as Singapore Airlines pilot at Delhi airport

Who is Sangeet Singh? Man arrested for posing as Singapore Airlines pilot at Delhi airport![submenu-img]() One of India’s most expensive wedding, attended by 5000 people, 100 room villa, cost Rs…

One of India’s most expensive wedding, attended by 5000 people, 100 room villa, cost Rs…![submenu-img]() Viral video: Delhi's 'Spiderman' take to streets on bike, get arrested; watch

Viral video: Delhi's 'Spiderman' take to streets on bike, get arrested; watch

)

)

)

)

)

)

)