- Home

- Latest News

  - Aishwarya blushes singing 'Meri Saason Mein Basa Hai' in presence of Salman, old video goes viral amid divorce rumours
  - IND vs SL, 1st T20I: Predicted playing XIs, live streaming details, weather and pitch report
  - Women's Asia Cup 2024: India beat Bangladesh by 10 wickets to reach final for 8th straight time
  - Apple reduces prices of iPhones across models, iPhones 13, 14 and 15 will be cheaper by Rs...
  - 'Elon Musk treated me badly for...,' says Tesla chief's daughter Vivian Jenna Wilson

- Webstory

- DNA Hindi

- Education

  - Meet woman, a doctor who cleared UPSC exam to become IAS officer, resigned after 7 years due to...
  - Meet IAS officer, one of India's most educated men, who earned 20 degrees, gold medals in...
  - Meet Maths genius, who worked with IIT, NASA, went missing suddenly, was found after years..
  - Meet Indian genius who fled to Delhi from Pakistan, worked at two IITs, awarded India’s top science award for…
  - Meet woman who cracked UPSC exam after accident, underwent 14 surgeries, still became IAS officer, she is...

- Videos

![submenu-img]() 5 Men Rape Australian Woman In Paris Just Days Ahead Of Olympic | Paris Olympics 2024

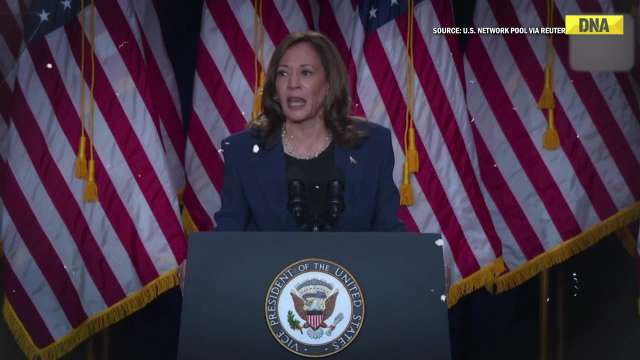

5 Men Rape Australian Woman In Paris Just Days Ahead Of Olympic | Paris Olympics 2024![submenu-img]() US Elections: 'I Know Trump's Type', Says Kamala Harris As She Launches Election Campaign

US Elections: 'I Know Trump's Type', Says Kamala Harris As She Launches Election Campaign![submenu-img]() Breaking! Nepal Plane Crash: Saurya Airlines Flight With 19 On Board Crashes In Kathmandu

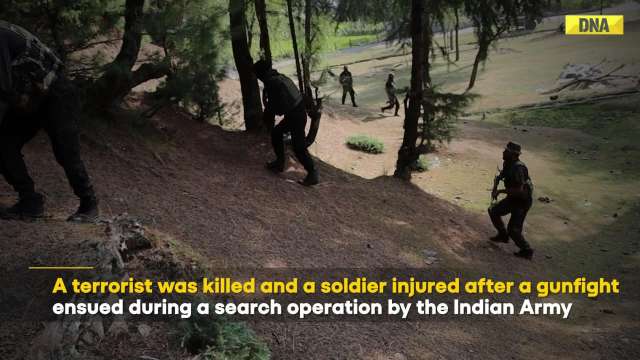

Breaking! Nepal Plane Crash: Saurya Airlines Flight With 19 On Board Crashes In Kathmandu![submenu-img]() J&K Encounter: Search Operation By Indian Army, Police Continue, 1 Terrorist Neutralised In Kupwara

J&K Encounter: Search Operation By Indian Army, Police Continue, 1 Terrorist Neutralised In Kupwara![submenu-img]() Breaking! Nepal Plane Crash: Saurya Airlines Flight With 19 On Board Crashes In Kathmandu

Breaking! Nepal Plane Crash: Saurya Airlines Flight With 19 On Board Crashes In Kathmandu

- Olympics 2024

- Photos

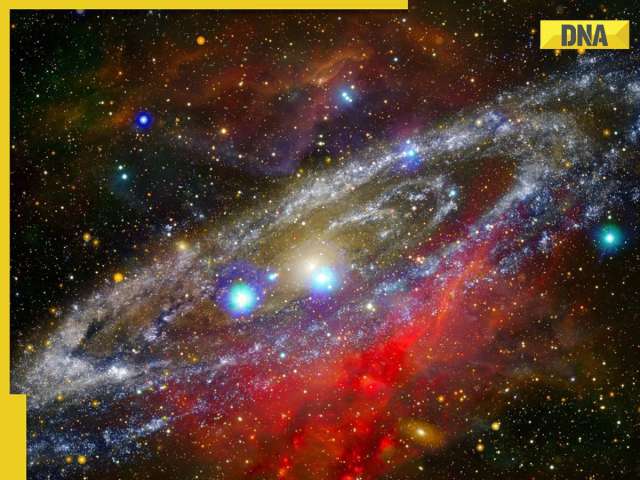

![submenu-img]() NASA images: 7 mesmerising images of space will make you fall in love with astronomy

NASA images: 7 mesmerising images of space will make you fall in love with astronomy![submenu-img]() 8 athletes with most Olympic medals

8 athletes with most Olympic medals![submenu-img]() In pics: Step inside Jalsa, Amitabh Bachchan, Jaya Bachchan's Rs 120 crore mansion with gym, jacuzzi, aesthetic decor

In pics: Step inside Jalsa, Amitabh Bachchan, Jaya Bachchan's Rs 120 crore mansion with gym, jacuzzi, aesthetic decor![submenu-img]() Remember Paul Blackthorne, Lagaan's Captain Russell? Quit films, did side roles in Hollywood, looks unrecognisable now

Remember Paul Blackthorne, Lagaan's Captain Russell? Quit films, did side roles in Hollywood, looks unrecognisable now![submenu-img]() This actor was called next superstar, bigger than Amitabh, Vinod Khanna, then lost stardom, was arrested for wife's...

This actor was called next superstar, bigger than Amitabh, Vinod Khanna, then lost stardom, was arrested for wife's...

- India

  - Meet man, tribal who tipped off Army about Pakistani intruders in Kargil, awaits relief from govt even after...
  - Puja Khedkar case latest update: Shocking details about her parents Manorama Khedkar, Dilip Khedkar revealed
  - Kargil Vijay Diwas Live Updates: PM Modi visits Dras to mark 25th anniversary of Kargil Vijay Diwas
  - Big rejig in BJP: New state chief for Bihar and Rajasthan named
  - Mumbai rains: Schools, colleges to operate normally today, BMC urges citizens to...

- DNA Explainers

  - DRDO fortifies India's skies: Phase II ballistic missile defence trial successful
  - Gaza Conflict Spurs Unlikely Partners: Hamas, Fatah factions sign truce in Beijing
  - Crackdowns and Crisis of Legitimacy: What lies beyond Bangladesh's apex court scaling down job quotas
  - Area 51: Alien testing ground or enigmatic US military base?
  - Transforming India's Aerospace Industry: Budget 2024 and Beyond

- Entertainment

![submenu-img]() Aishwarya blushes singing 'Meri Saason Mein Basa Hai' in presence of Salman, old video goes viral amid divorce rumours

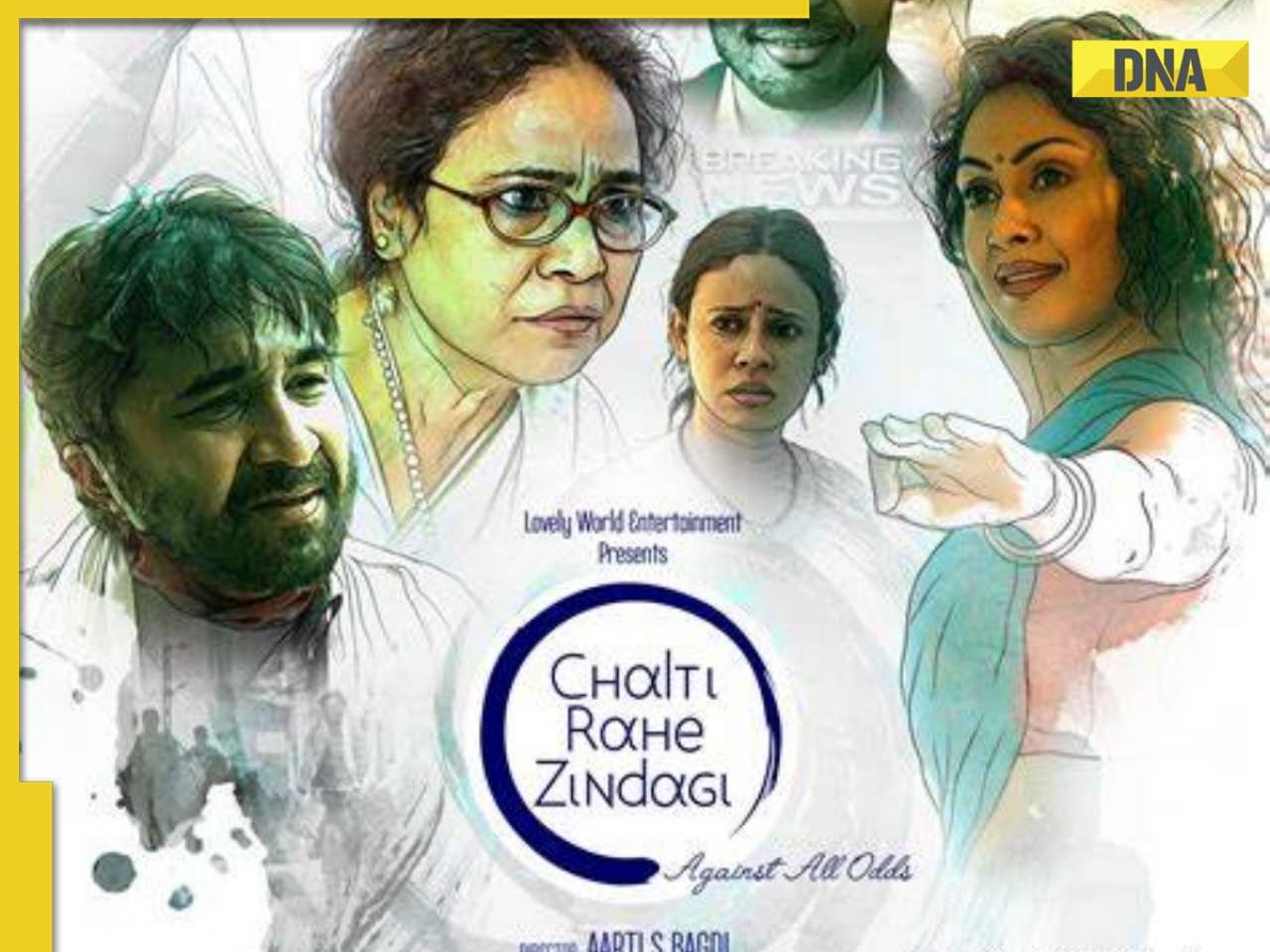

Aishwarya blushes singing 'Meri Saason Mein Basa Hai' in presence of Salman, old video goes viral amid divorce rumours![submenu-img]() Chalti Rahe Zindagi review: Siddhant Kapoor's relatable but boring lockdown drama can be skipped

Chalti Rahe Zindagi review: Siddhant Kapoor's relatable but boring lockdown drama can be skipped ![submenu-img]() 'This is nothing but...': Pahlaj Nihalani on CBFC's delay in censor certification of John Abraham's Vedaa

'This is nothing but...': Pahlaj Nihalani on CBFC's delay in censor certification of John Abraham's Vedaa ![submenu-img]() Does Janhvi Kapoor pay for social media praise, positive comments? Actress reacts, 'itna budget...'

Does Janhvi Kapoor pay for social media praise, positive comments? Actress reacts, 'itna budget...'![submenu-img]() Parineeti Chopra's cryptic post about 'throwing toxic people out of life' scares fans: 'Stop living for...'

Parineeti Chopra's cryptic post about 'throwing toxic people out of life' scares fans: 'Stop living for...'

- Viral News

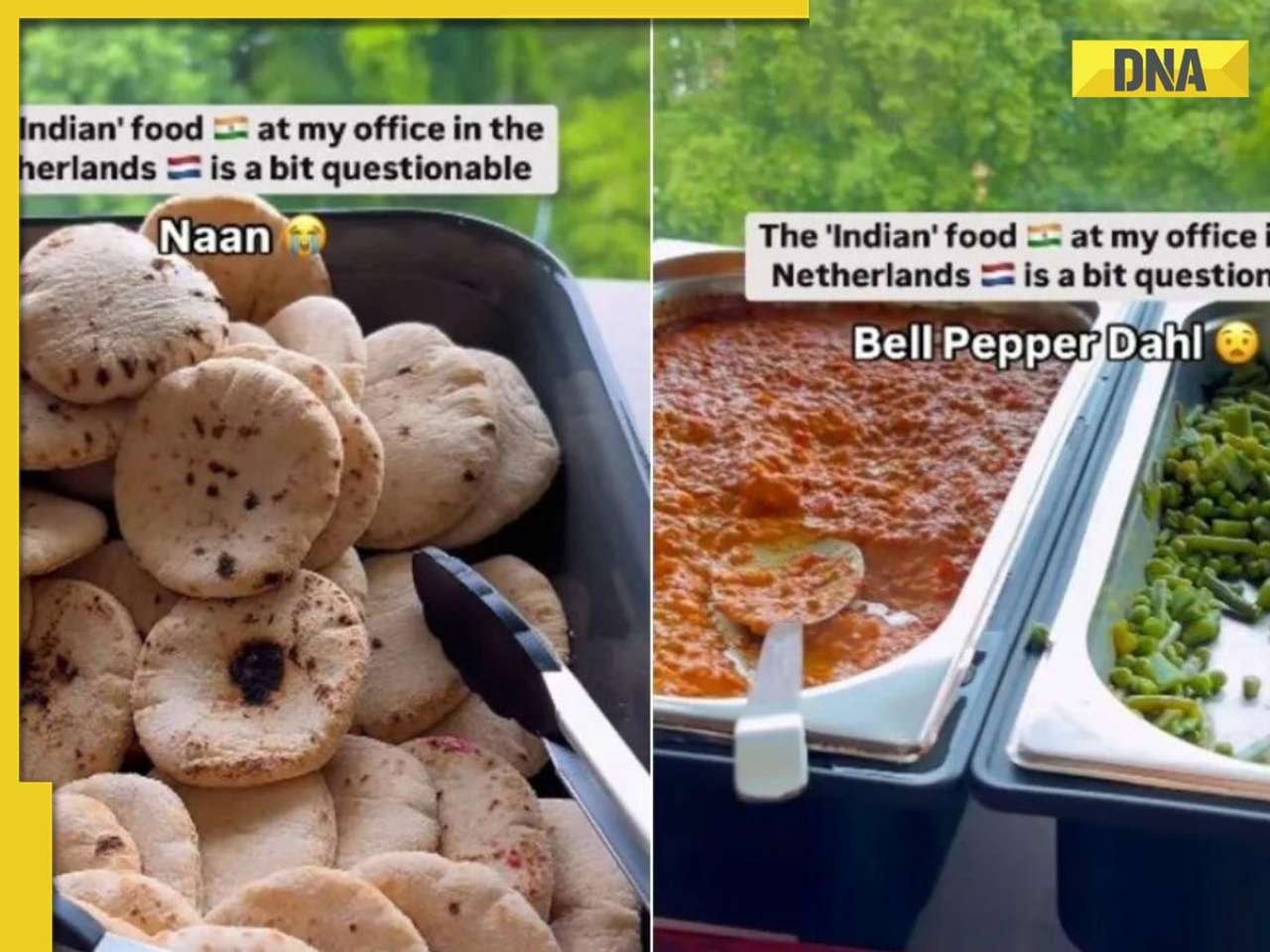

![submenu-img]() Watch video: 'Questionable' Indian food served to employees in Dutch office; Internet reacts

Watch video: 'Questionable' Indian food served to employees in Dutch office; Internet reacts![submenu-img]() This small nation is most important country in world, plays huge role in shaping geopolitics, it is...

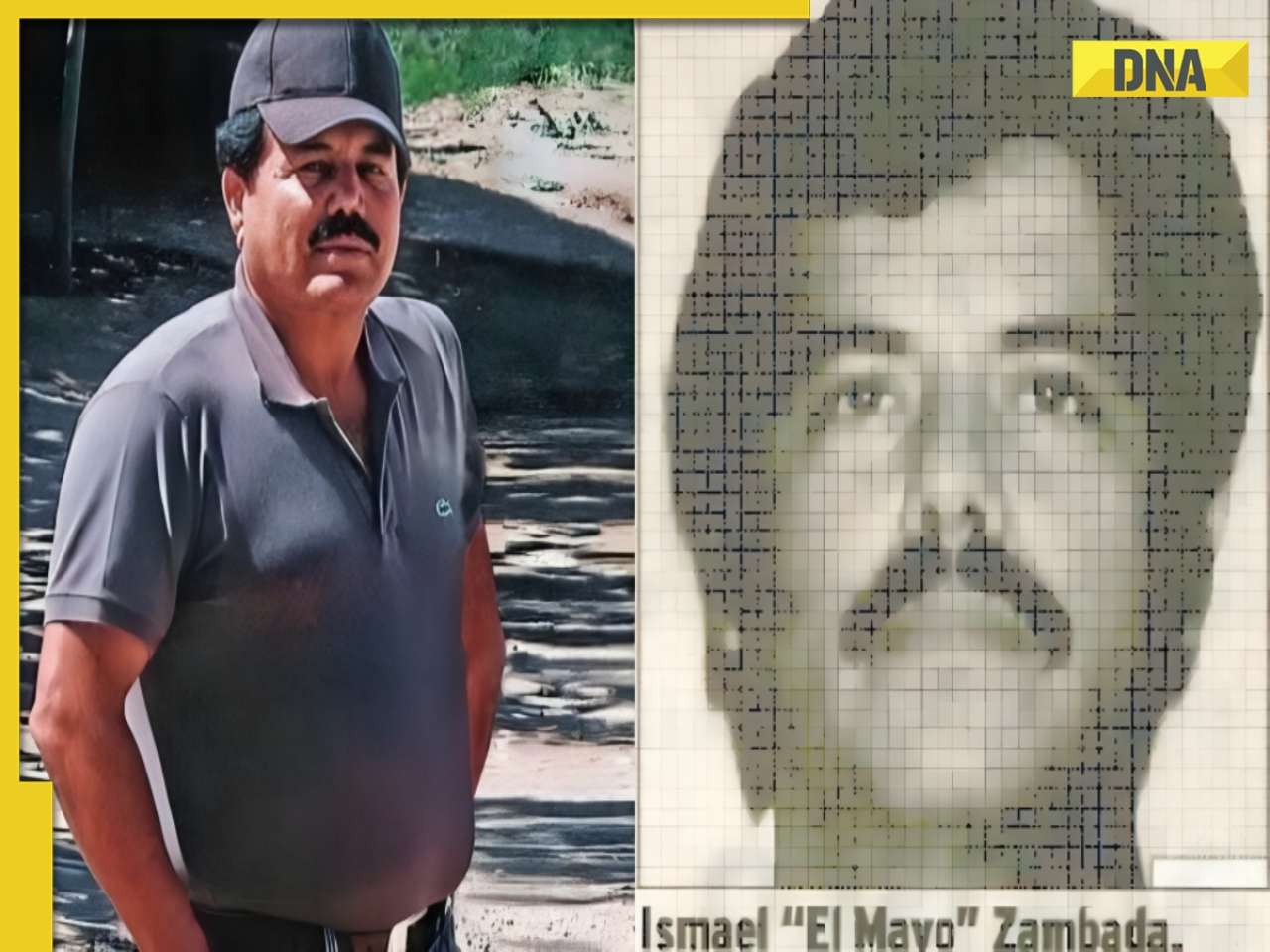

This small nation is most important country in world, plays huge role in shaping geopolitics, it is...![submenu-img]() El Mayo in US custody: Who is Mexican drug lord Ismael Zambada, Sinaloa cartel leader arrested with El Chapo's son?

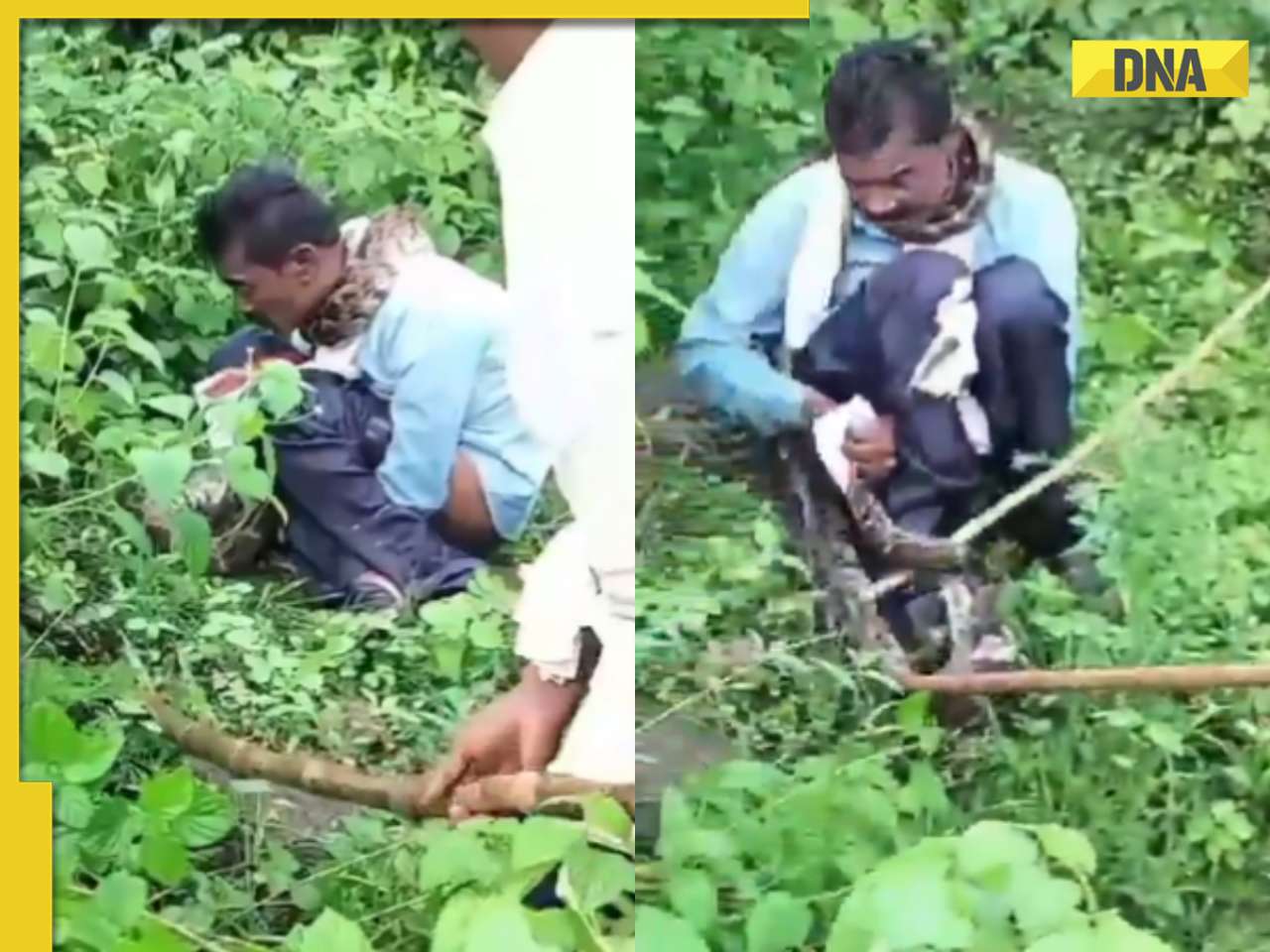

El Mayo in US custody: Who is Mexican drug lord Ismael Zambada, Sinaloa cartel leader arrested with El Chapo's son?![submenu-img]() Viral video: 15-foot python attacks and nearly swallows Jabalpur man, here's how locals save him, watch

Viral video: 15-foot python attacks and nearly swallows Jabalpur man, here's how locals save him, watch![submenu-img]() 'Anant knows everything': Akash Ambani, Isha Ambani tell Amitabh Bachchan as…

'Anant knows everything': Akash Ambani, Isha Ambani tell Amitabh Bachchan as…
