The Consumer Electronics Show (CES), the biggest annual technology showcase on the planet, kicked off in Las Vegas a few hours ago. From next-generation displays and wearable computing to almost every type of consumer product that will have some form of computing power and Web connectivity, this week will see some of the most bleeding-edge technologies unveiled.

Kicking off the event was Jen-Hsun Huang, President and CEO of Nvidia, the company behind all those pixel-pushing gaming graphics and graphically advanced mobile processors. Going by what was unveiled, this year should see an onslaught of connected systems, not just in traditional computing devices but also in cars and everyday consumer objects.

At the centre of Huang's keynote was Nvidia's new Tegra X1, a 64-bit mobile processor that the company bills as the first mobile chip to deliver 1 teraflop of computing power. The implications of this horsepower became evident during his demonstrations, in which he showcased the future of in-car control, navigation and entertainment systems built on the new chip. It appears to be a processing behemoth, pairing a 256-core GPU based on Nvidia's Maxwell architecture with an 8-core CPU. Together, they deliver the kind of visual computing ability that Nvidia hopes will revolutionise cars and driving in the near term.
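As a rough sanity check on the 1-teraflop figure, here is a back-of-the-envelope estimate. It rests on assumptions not stated in the keynote coverage: a GPU clock of about 1 GHz, each of the 256 cores completing one fused multiply-add (counted as two floating-point operations) per cycle, and the headline number counting half-precision (FP16) arithmetic, which the chip can process two values at a time:

$$
256\ \text{cores} \times 2\ \tfrac{\text{FLOPs}}{\text{FMA}} \times 2\ (\text{packed FP16}) \times 1.0 \times 10^{9}\ \tfrac{\text{cycles}}{\text{s}} \approx 1.02 \times 10^{12}\ \text{FLOPS}
$$

Dropping the FP16 factor gives roughly 512 gigaflops at single precision (FP32), so the 1-teraflop claim is best read as a half-precision figure.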

This new processor forms the foundation of Nvidia's newly announced automotive platforms. Drive CX is a digital cockpit computer built on a single Tegra X1, while Drive PX, an autopilot computer, uses two Tegra X1 chips that together deliver 2.3 teraflops of mobile supercomputing power, can process inputs from 12 real-time cameras, and runs a neural-network-based vision system that Nvidia says lets the car learn visual cues for driving. Nvidia says its chips are already in 6.2 million cars, including models from Audi, BMW, Porsche and Tesla. The new systems will enable upcoming cars to recognise everything from road signs to pedestrians to other cars, even the specific type of vehicle.

Apart from the amped-up visuals of the Drive CX digital cockpit, which can render a range of 'textures' such as aluminium, copper and even bamboo for the instrument cluster dials, the system also uses a type of visual processing called surround vision. It takes live feeds from several fisheye cameras around the car and stitches the imagery together to make sense of the immediate environment for autonomous driving decisions.

Drive PX uses neural networks for image processing, harnessing the power of Nvidia's GPUs to recognise details and features in camera images. In real time, it can recognise multiple features of a driving environment, including partially obscured pedestrians and objects, and can even detect special situations such as the flashing lights of ambulances or school buses. According to Nvidia, recognition accuracy keeps improving over time: when the system misidentifies something, the error is fed back to a connected image repository in the cloud, the network is adjusted, and subsequent recognitions improve. This has allowed its visual systems to evolve far more quickly; what used to take several months of machine training can now happen in days or weeks.

Also part of this otherworldly car-of-the-future demonstration was the vehicle's ability to enter a parking lot, drive around, find an empty spot and park itself. And with a paired smartphone, the car will be able to find its way back to you, like an automated valet.

Stay tuned as we bring you the best of CES 2015 right here, and follow all the latest updates on our Twitter feed at #DNATech.

![submenu-img]() Anushka Sharma, Virat Kohli officially reveal newborn son Akaay's face but only to...

Anushka Sharma, Virat Kohli officially reveal newborn son Akaay's face but only to...![submenu-img]() Elon Musk's Tesla to fire more than 14000 employees, preparing company for...

Elon Musk's Tesla to fire more than 14000 employees, preparing company for...![submenu-img]() Meet man, who cracked UPSC exam, then quit IAS officer's post to become monk due to...

Meet man, who cracked UPSC exam, then quit IAS officer's post to become monk due to...![submenu-img]() How Imtiaz Ali failed Amar Singh Chamkila, and why a good film can also be a bad biopic | Opinion

How Imtiaz Ali failed Amar Singh Chamkila, and why a good film can also be a bad biopic | Opinion![submenu-img]() Ola S1 X gets massive price cut, electric scooter price now starts at just Rs…

Ola S1 X gets massive price cut, electric scooter price now starts at just Rs…![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth![submenu-img]() In pics: Rajinikanth, Kamal Haasan, Mani Ratnam, Suriya attend S Shankar's daughter Aishwarya's star-studded wedding

In pics: Rajinikanth, Kamal Haasan, Mani Ratnam, Suriya attend S Shankar's daughter Aishwarya's star-studded wedding![submenu-img]() In pics: Sanya Malhotra attends opening of school for neurodivergent individuals to mark World Autism Month

In pics: Sanya Malhotra attends opening of school for neurodivergent individuals to mark World Autism Month![submenu-img]() Remember Jibraan Khan? Shah Rukh's son in Kabhi Khushi Kabhie Gham, who worked in Brahmastra; here’s how he looks now

Remember Jibraan Khan? Shah Rukh's son in Kabhi Khushi Kabhie Gham, who worked in Brahmastra; here’s how he looks now![submenu-img]() From Bade Miyan Chote Miyan to Aavesham: Indian movies to watch in theatres this weekend

From Bade Miyan Chote Miyan to Aavesham: Indian movies to watch in theatres this weekend ![submenu-img]() Streaming This Week: Amar Singh Chamkila, Premalu, Fallout, latest OTT releases to binge-watch

Streaming This Week: Amar Singh Chamkila, Premalu, Fallout, latest OTT releases to binge-watch![submenu-img]() DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?

DNA Explainer: What is Israel's Arrow-3 defence system used to intercept Iran's missile attack?![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() DNA Explainer: What is India's stand amid Iran-Israel conflict?

DNA Explainer: What is India's stand amid Iran-Israel conflict?![submenu-img]() DNA Explainer: Why Iran attacked Israel with hundreds of drones, missiles

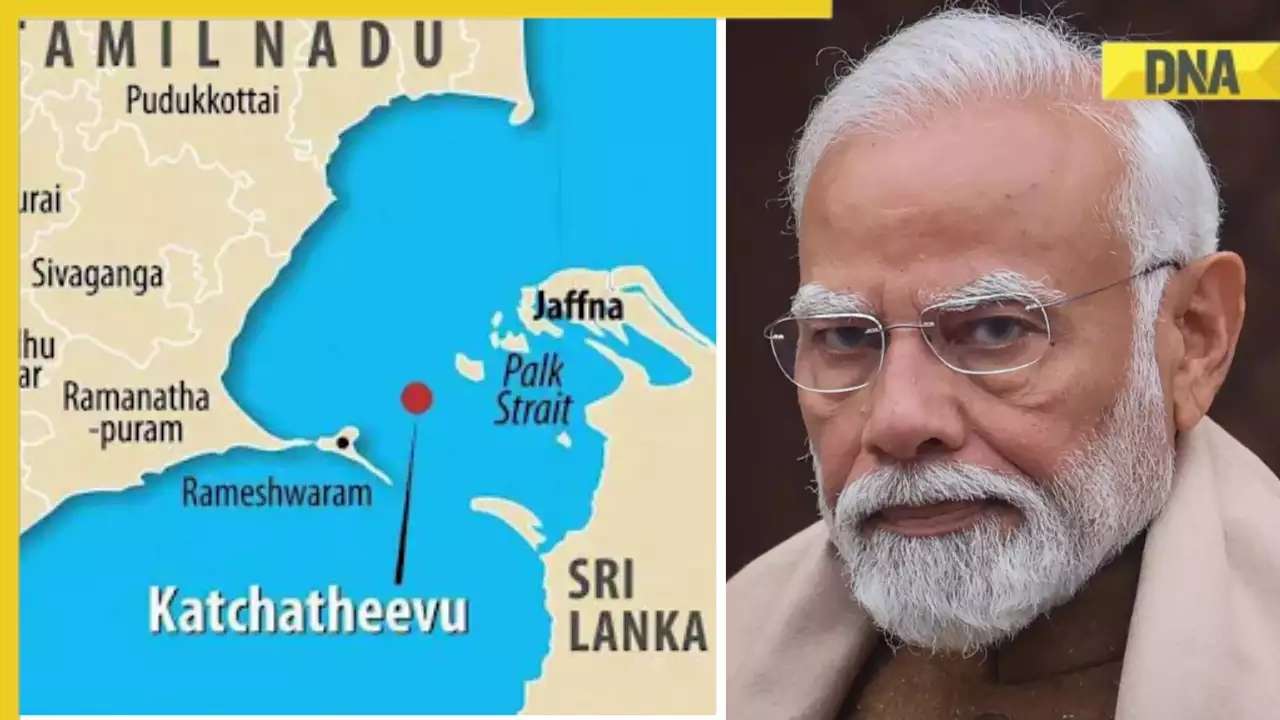

DNA Explainer: Why Iran attacked Israel with hundreds of drones, missiles![submenu-img]() What is Katchatheevu island row between India and Sri Lanka? Why it has resurfaced before Lok Sabha Elections 2024?

What is Katchatheevu island row between India and Sri Lanka? Why it has resurfaced before Lok Sabha Elections 2024?![submenu-img]() Anushka Sharma, Virat Kohli officially reveal newborn son Akaay's face but only to...

Anushka Sharma, Virat Kohli officially reveal newborn son Akaay's face but only to...![submenu-img]() How Imtiaz Ali failed Amar Singh Chamkila, and why a good film can also be a bad biopic | Opinion

How Imtiaz Ali failed Amar Singh Chamkila, and why a good film can also be a bad biopic | Opinion![submenu-img]() Aamir Khan files FIR after video of him 'promoting particular party' circulates ahead of Lok Sabha elections: 'We are..'

Aamir Khan files FIR after video of him 'promoting particular party' circulates ahead of Lok Sabha elections: 'We are..'![submenu-img]() Henry Cavill and girlfriend Natalie Viscuso expecting their first child together, actor says 'I'm very excited'

Henry Cavill and girlfriend Natalie Viscuso expecting their first child together, actor says 'I'm very excited'![submenu-img]() This actress was thrown out of films, insulted for her looks, now owns private jet, sea-facing bungalow worth Rs...

This actress was thrown out of films, insulted for her looks, now owns private jet, sea-facing bungalow worth Rs...![submenu-img]() IPL 2024: Travis Head, Heinrich Klaasen power SRH to 25 run win over RCB

IPL 2024: Travis Head, Heinrich Klaasen power SRH to 25 run win over RCB![submenu-img]() KKR vs RR, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

KKR vs RR, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() KKR vs RR IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Rajasthan Royals

KKR vs RR IPL 2024 Dream11 prediction: Fantasy cricket tips for Kolkata Knight Riders vs Rajasthan Royals![submenu-img]() RCB vs SRH, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report

RCB vs SRH, IPL 2024: Predicted playing XI, live streaming details, weather and pitch report![submenu-img]() IPL 2024: Rohit Sharma's century goes in vain as CSK beat MI by 20 runs

IPL 2024: Rohit Sharma's century goes in vain as CSK beat MI by 20 runs![submenu-img]() Watch viral video: Isha Ambani, Shloka Mehta, Anant Ambani spotted at Janhvi Kapoor's home

Watch viral video: Isha Ambani, Shloka Mehta, Anant Ambani spotted at Janhvi Kapoor's home![submenu-img]() This diety holds special significance for Mukesh Ambani, Nita Ambani, Isha Ambani, Akash, Anant , it is located in...

This diety holds special significance for Mukesh Ambani, Nita Ambani, Isha Ambani, Akash, Anant , it is located in...![submenu-img]() Swiggy delivery partner steals Nike shoes kept outside flat, netizens react, watch viral video

Swiggy delivery partner steals Nike shoes kept outside flat, netizens react, watch viral video![submenu-img]() iPhone maker Apple warns users in India, other countries of this threat, know alert here

iPhone maker Apple warns users in India, other countries of this threat, know alert here![submenu-img]() Old Digi Yatra app will not work at airports, know how to download new app

Old Digi Yatra app will not work at airports, know how to download new app

)

)

)

)

)

)

)

)

)

)

)