- Home

- Latest News

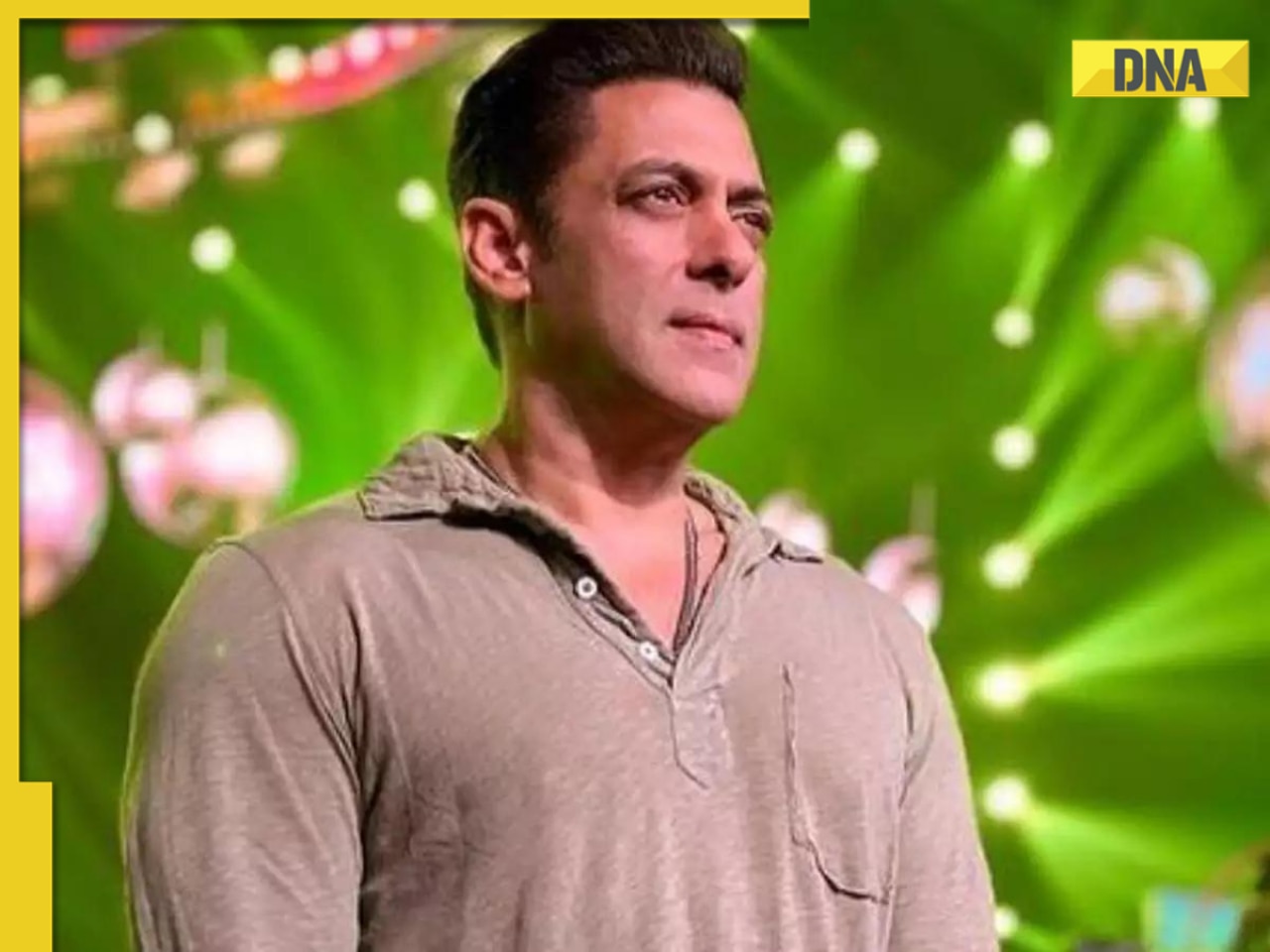

![submenu-img]() Firing at Salman Khan's house: Shooter identified as Gurugram criminal 'involved in multiple killings', probe begins

Firing at Salman Khan's house: Shooter identified as Gurugram criminal 'involved in multiple killings', probe begins![submenu-img]() Salim Khan breaks silence after firing outside Salman Khan's Mumbai house: 'They want...'

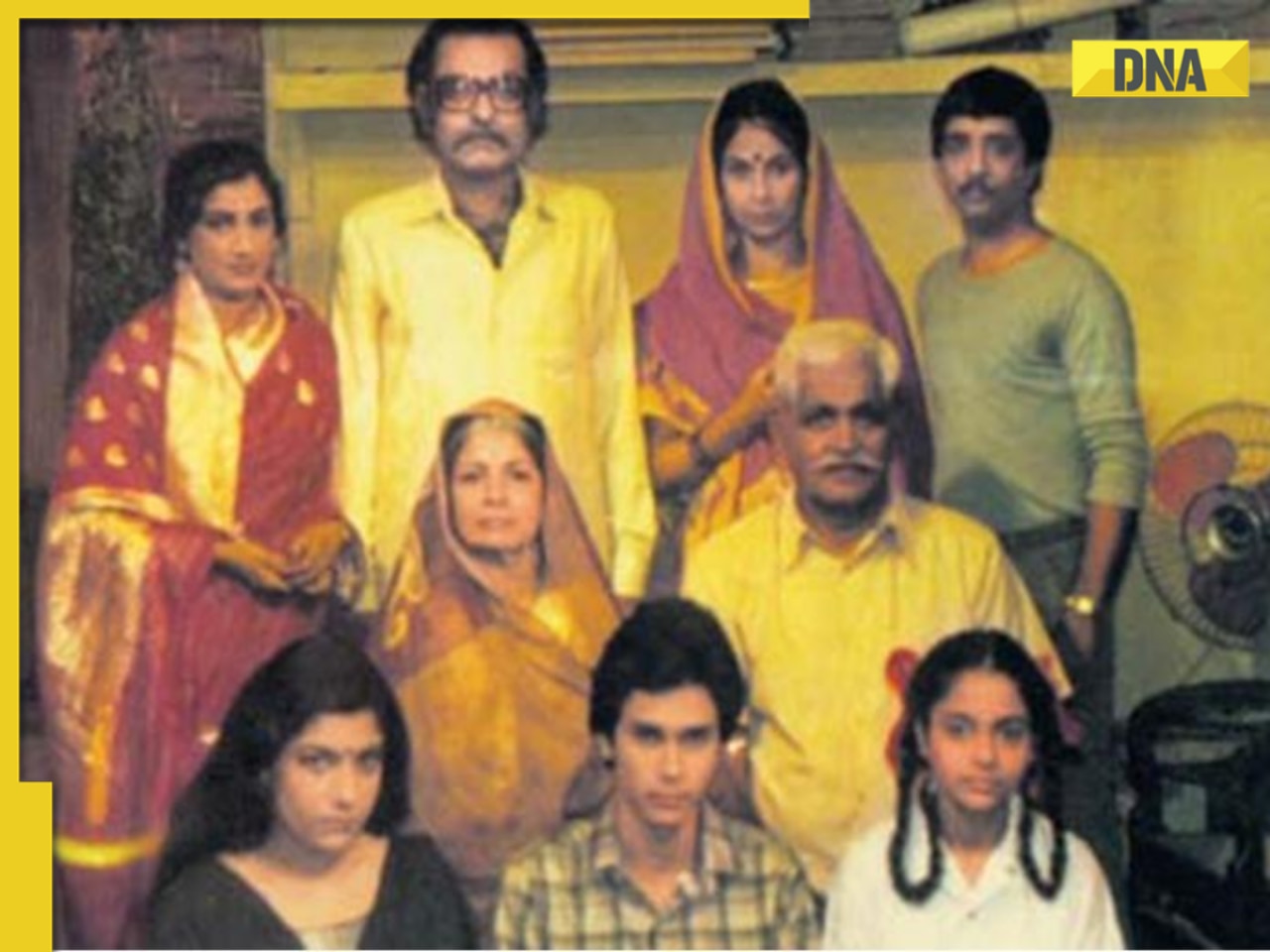

Salim Khan breaks silence after firing outside Salman Khan's Mumbai house: 'They want...'![submenu-img]() India's first TV serial had 5 crore viewers; higher TRP than Naagin, Bigg Boss combined; it's not Ramayan, Mahabharat

India's first TV serial had 5 crore viewers; higher TRP than Naagin, Bigg Boss combined; it's not Ramayan, Mahabharat![submenu-img]() Vellore Lok Sabha constituency: Check polling date, candidates list, past election results

Vellore Lok Sabha constituency: Check polling date, candidates list, past election results![submenu-img]() Meet NEET-UG topper who didn't take admission in AIIMS Delhi despite scoring AIR 1 due to...

Meet NEET-UG topper who didn't take admission in AIIMS Delhi despite scoring AIR 1 due to...

- Election 2024

- Webstory

- IPL 2024

- DNA Verified

![submenu-img]() DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'

DNA Verified: Is CAA an anti-Muslim law? Centre terms news report as 'misleading'![submenu-img]() DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message

DNA Verified: Lok Sabha Elections 2024 to be held on April 19? Know truth behind viral message![submenu-img]() DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here

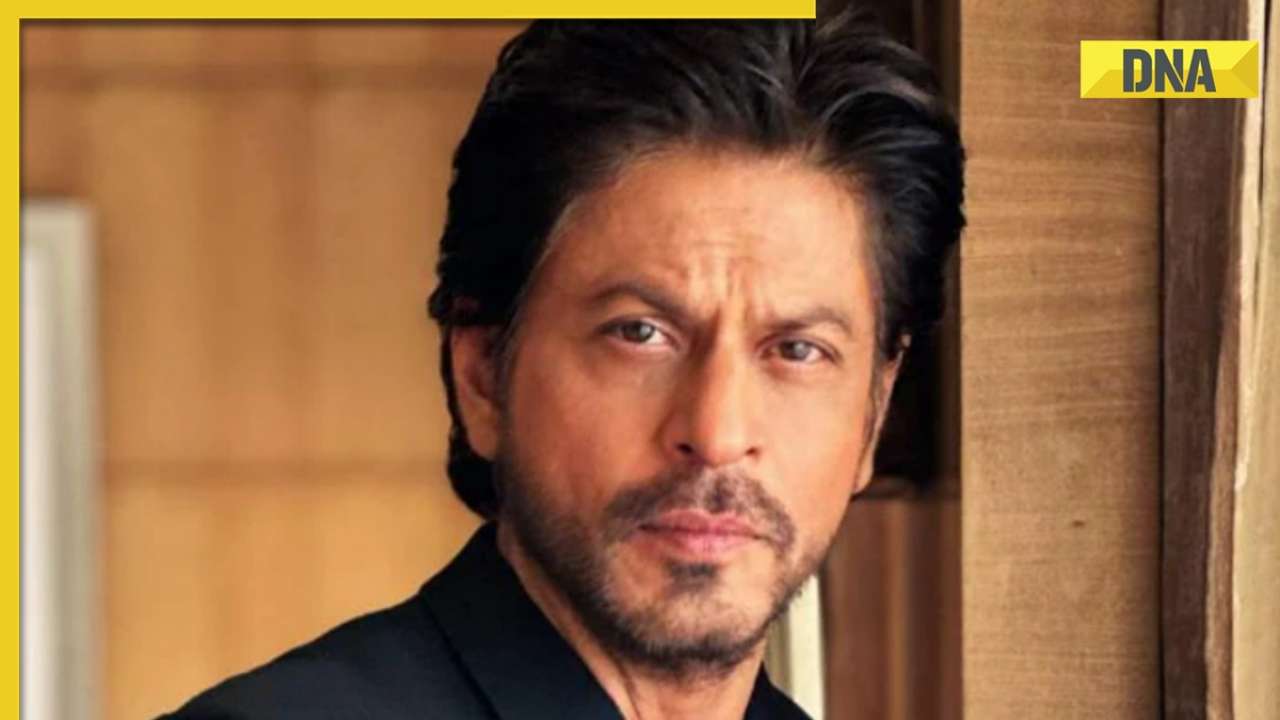

DNA Verified: Modi govt giving students free laptops under 'One Student One Laptop' scheme? Know truth here![submenu-img]() DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar

DNA Verified: Shah Rukh Khan denies reports of his role in release of India's naval officers from Qatar![submenu-img]() DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

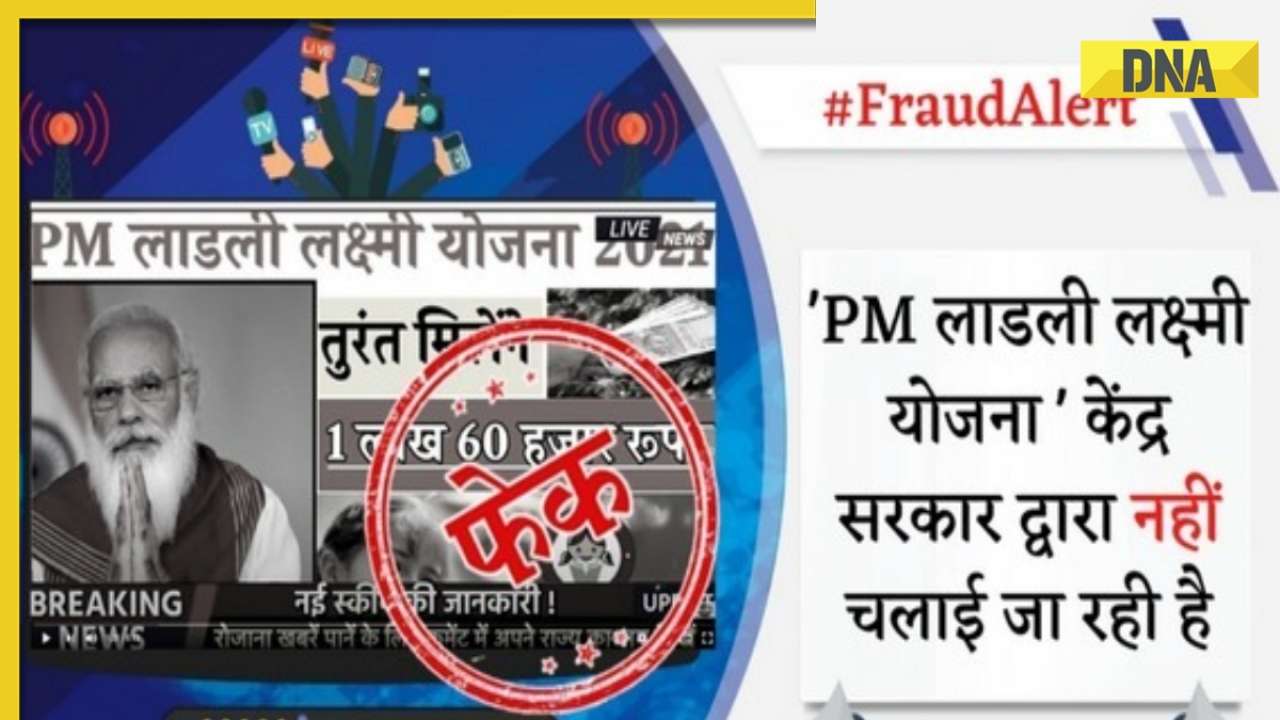

DNA Verified: Is govt providing Rs 1.6 lakh benefit to girls under PM Ladli Laxmi Yojana? Know truth

- DNA Her

- Photos

  - Remember Jibraan Khan? Shah Rukh's son in Kabhi Khushi Kabhie Gham, who worked in Brahmastra; here’s how he looks now

  - From Bade Miyan Chote Miyan to Aavesham: Indian movies to watch in theatres this weekend

  - Streaming This Week: Amar Singh Chamkila, Premalu, Fallout, latest OTT releases to binge-watch

  - Remember Tanvi Hegde? Son Pari's Fruity who has worked with Shahid Kapoor, here's how gorgeous she looks now

  - Remember Kinshuk Vaidya? Shaka Laka Boom Boom star, who worked with Ajay Devgn; here’s how dashing he looks now

- Explainers

![submenu-img]() DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence

DNA Explainer: How Iranian projectiles failed to breach iron-clad Israeli air defence![submenu-img]() DNA Explainer: What is India's stand amid Iran-Israel conflict?

DNA Explainer: What is India's stand amid Iran-Israel conflict?![submenu-img]() DNA Explainer: Why Iran attacked Israel with hundreds of drones, missiles

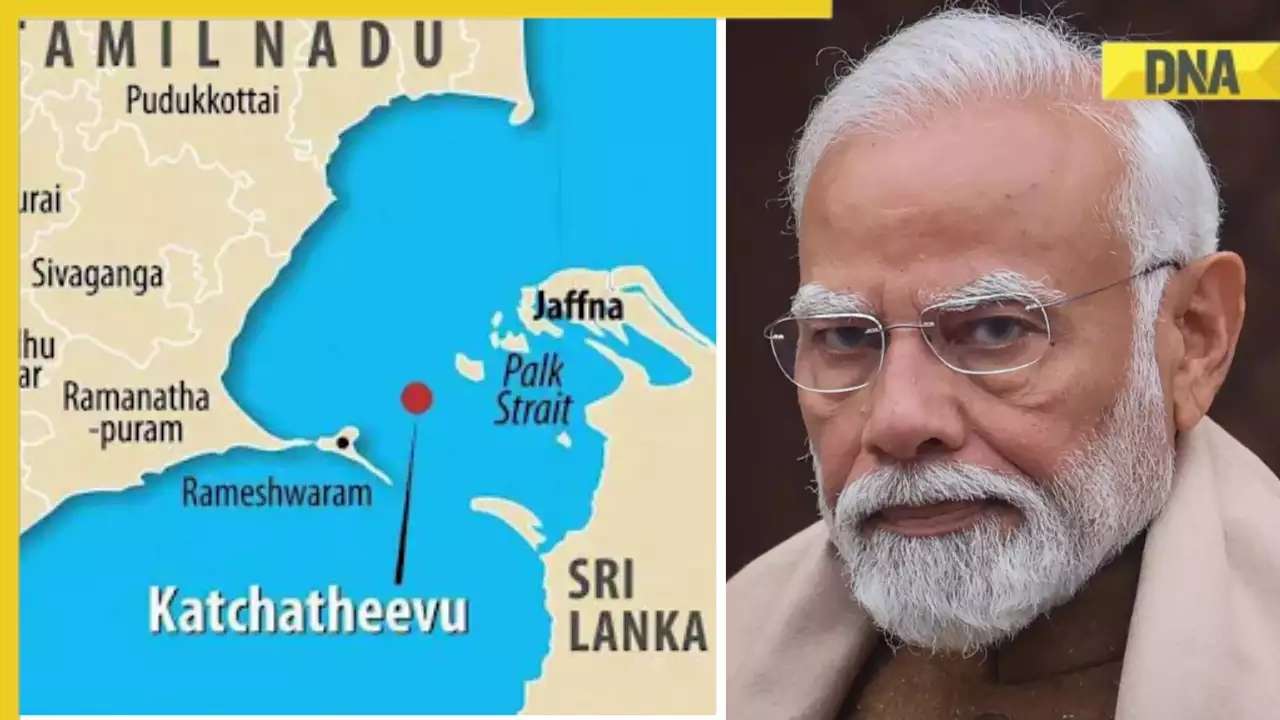

DNA Explainer: Why Iran attacked Israel with hundreds of drones, missiles![submenu-img]() What is Katchatheevu island row between India and Sri Lanka? Why it has resurfaced before Lok Sabha Elections 2024?

What is Katchatheevu island row between India and Sri Lanka? Why it has resurfaced before Lok Sabha Elections 2024?![submenu-img]() DNA Explainer: Reason behind caused sudden storm in West Bengal, Assam, Manipur

DNA Explainer: Reason behind caused sudden storm in West Bengal, Assam, Manipur

- Entertainment

  - Firing at Salman Khan's house: Shooter identified as Gurugram criminal 'involved in multiple killings', probe begins

  - Salim Khan breaks silence after firing outside Salman Khan's Mumbai house: 'They want...'

  - India's first TV serial had 5 crore viewers; higher TRP than Naagin, Bigg Boss combined; it's not Ramayan, Mahabharat

  - This film has earned Rs 1000 crore before release, beaten Animal, Pathaan, Gadar 2 already; not Kalki 2898 AD, Singham 3

  - This Bollywood star was intimidated by co-stars, abused by director, worked as AC mechanic, later gave Rs 2000-crore hit

- Sports

  - IPL 2024: Rohit Sharma's century goes in vain as CSK beat MI by 20 runs

  - RCB vs SRH IPL 2024 Dream11 prediction: Fantasy cricket tips for Royal Challengers Bengaluru vs Sunrisers Hyderabad

  - IPL 2024: Phil Salt, Mitchell Starc power Kolkata Knight Riders to 8-wicket win over Lucknow Super Giants

  - IPL 2024: Why are Lucknow Super Giants wearing green and maroon jersey against Kolkata Knight Riders at Eden Gardens?

  - IPL 2024: Shimron Hetmyer, Yashasvi Jaiswal power RR to 3-wicket win over PBKS

- Viral News

  - Watch viral video: Isha Ambani, Shloka Mehta, Anant Ambani spotted at Janhvi Kapoor's home

  - This deity holds special significance for Mukesh Ambani, Nita Ambani, Isha Ambani, Akash, Anant; it is located in...

  - Swiggy delivery partner steals Nike shoes kept outside flat, netizens react, watch viral video

  - iPhone maker Apple warns users in India, other countries of this threat, know alert here

  - Old Digi Yatra app will not work at airports, know how to download new app
